PI's response to DCMS' Online Advertising Programme consultation in the UK.

The ministerial Department for Culture, Media and Sport (DCMS) in the UK recently ran a consultation to review the regulatory framework for paid-for online advertising. The aim, according to DCMS, is "to tackle the evident lack of transparency and accountability across the whole supply chain."

PI has long been concerned about the online advertising industry, particularly the harm caused by adtech companies and data brokers in the advertising supply chain. PI welcomed the opportunity to respond to the Online Advertising Programme (OAP) consultation.

- For some time now, PI has been concerned by the explosive growth of online advertising fuelled by people’s personal information, often known as “behavioural advertising”, and in particular by the actions of “ad tech” companies and “data brokers” and their role in the advertising supply chain.

- Through our investigations we have been shocked to see how intrusive and harmful behavioural advertising has become. We have evidence of personal information being collected from, for example, women who have just given birth and users of mental health websites, and then shared with third parties without their knowledge. This sensitive information can then be combined with other information in order to build “profiles” of people and target them with adverts online.

- We have been campaigning for improvements in the industry and to curb its negative impacts on people.

- While we support this proposal and agree with the rationale for intervention, as a starting point we would like to see existing regulation (such as the UK GDPR) be properly and regularly enforced. We would rather resources were focused on enforcing existing data protection standards, and as a result that more investigations are opened into intermediaries and platforms. All the measures outlined in the OAP should work hand in hand with the UK GDPR, as reinforcements and compliance incentives rather than alternative standards.

In our submission we outline our concerns with the industry, drawing on extensive technical research and on the complaints we subsequently took to data protection authorities in Europe.

Data brokers must specifically be included in "actors in scope."

We recommend that "data brokers" are specifically included in the list of "actors in scope". A data broker is a company that collects, buys and sells personal data and this is often how they earn their primary revenue. It is a term that is entering public understanding after long campaigns by organisations like Privacy International. We are concerned about the lack of transparency of the data broker industry, in particular as the data collection and profiling performed by data brokers is mostly invisible to the user. The majority of the companies involved are unlikely to be household names and the average user is unlikely to have a direct relationship with them. But their influence and impact on the advertising ecosystem cannot be overstated - the vast majority of the harms caused by online advertising that the proposal seeks to tackle are caused by the source, nature and exploitation of the personal data that feeds advertising placement decisions. Most of this data is either sourced from or enriched by data brokers. Data brokers are notoriously opaque and it is imperative that the UK's proposal makes these companies visible due to the central role they play in the industry and, in our opinion, the source of many harms the proposal intends to tackle.

In addition, some well-known companies also operate data broker services for marketing, and it is important to recognise this too. We therefore recommend that public-facing examples of data brokers are also included, such as credit reference agencies and parenting clubs.

For more information and examples of such data brokers and how they compound harm in the online advertising ecosystem, please see the timeline of complaints against the ad tech industry we have compiled, and our case page on the complaints we filed against data brokers, ad-tech companies and credit referencing agencies. Some of these complaints are still under investigation by data protection authorities. While the GDPR has been crucial in reining in these companies' practices, it is essential that their role in the online advertising ecosystem is understood and tackled by the Online Advertising Programme.

We understand that the ICO is "examining the use of ad tech in the targeting of adverts to consumers through programmatic advertising", and hence that "Privacy issues" have been excluded from the scope of the OAP. While we welcome this ongoing work by the ICO, the online advertising ecosystem cannot be regulated in a piecemeal fashion. The harms that the OAP is seeking to remedy all stem from an interconnected web of actors and practices that cannot be grappled with in isolation from each other. We would therefore encourage the OAP to include privacy issues within its scope, or at a very minimum recommend that the ICO be fully involved in the development of the OAP.

We would also like to comment on the exclusion of "Political advertising" from the scope of the OAP. While we understand the rationale for the exclusion, we consider that the various harms at play in political advertising stem from the same mechanisms and controversial practices involved in general advertising, in particular excessive data collection and profiling. Regulation of political advertising would therefore benefit greatly from being considered in conjunction with other issues covered by the OAP.

Does the consultation capture the main market dynamics and describe the main supply chains?

Overall this is a clear and helpful description of market dynamics and the main supply chain.

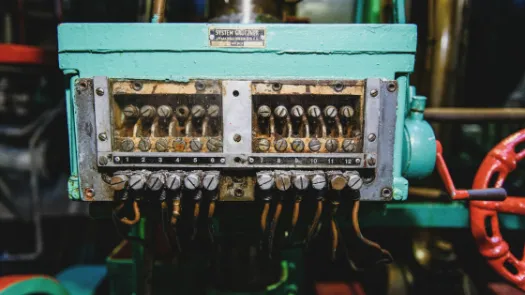

However, in line with our response to question 3, we would like to see more mentions of data brokers in the descriptions of these market dynamics and supply chains. They are referred to (as "data suppliers") only once in this section: "The industry also includes further market participants involved in the provision and management of data, targeting practices and analytics in online advertising including data suppliers, data management platforms (DMPs), and measurement and verification providers." Their denomination as "further market participants" ignores the fundamental role they play in shaping the delivery of online advertising.

Does the consultation describe the main recent technological developments in online advertising?

In our view, data collection is widespread and out of control, and this is the central problem to tackle in order to reduce harm. Data collection is the first step in a long and opaque process. Many technological developments attempt to find palatable ways of maintaining the status quo of collecting much more personal data than is needed for targeting advertising, without transparency or accountability. Such is the case with the widespread integration of third-party SDKs (software development kits) within mobile apps, for example, which can be used to stealthily collect massive amounts of data, including user location. The average Android app will use around 15.6 third-party SDKs, and a lot of these will be available to developers for free as a means to monetise their app, in exchange for the information they can collect from the apps where they're used or a cut of the ads they can sell through them. There have been countless stories of data being collected in this way and later sold and re-purposed in a harmful way. As an example, Vice reported that data collected from a Muslim prayer app had been sold to a US military counter-terrorism division in order to assist with overseas operations.

This is yet another manifestation of the central issue of indiscriminate and opaque online data collection that is eroding the trust of users, leading to “Consumers feel[ing] they are being “stalked” around the internet” as stated in the introduction of this proposal. What is to be avoided is a "cat and mouse" game of technological developments, for example trackers and ad blockers, without the central problem of excessive data collection being tackled.

Does the taxonomy of harms cover the main types of harm found in online advertising, both in terms of the categories of harm as well as the main actors impacted by those harms?

The taxonomy of harms is well put together and covers many consumer issues and concerns that are outside the scope of PI's work, but which we support.

We would argue, however, that harms do not stem from ad targeting alone, or from the content of adverts. There are many steps in the process before adverts are served in a targeted manner. Targeting is enabled by data collection. The data collection itself is a harm. Put simply, what personal data has been collected that allows a person to be targeted with this kind of harmful content in the first place? The stages of targeting could be broken down as:

i) Data collection: Methods of collecting or obtaining personal data, including through hidden means such as trackers placed on the websites you visit or apps you download, from open sources such as voter registries or social media, or by buying databases and profiles from other sources such as data brokers.

ii) Profiling: This involves dividing users into small groups or “segments” based on characteristics such as personality traits, interests, background or previous online behaviour.

iii) Personalisation: This involves designing personalised content for each segment.

iv) Targeting: Personalised content is distributed using online platforms to reach the targeted group with these tailor-made, targeted messages.
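The four stages above can be sketched as a toy pipeline. This is only an illustrative sketch: the events, user identifiers, topics and function names are all made up for the example and do not describe any real company's system.

```python
from collections import defaultdict

# Made-up browsing events standing in for data gathered by trackers.
EVENTS = [
    {"user": "u1", "topic": "newborn care"},
    {"user": "u1", "topic": "sleep problems"},
    {"user": "u2", "topic": "sleep problems"},
]

def collect(events):
    """Stage i: gather every observed topic per user."""
    collected = defaultdict(set)
    for event in events:
        collected[event["user"]].add(event["topic"])
    return dict(collected)

def profile(collected):
    """Stage ii: group users into segments (here, one segment per topic)."""
    segments = defaultdict(set)
    for user, topics in collected.items():
        for topic in topics:
            segments[topic].add(user)
    return dict(segments)

def personalise(segments):
    """Stage iii: design one message per segment."""
    return {s: f"Ad written for people interested in {s}" for s in segments}

def target(segments, creatives):
    """Stage iv: deliver each segment's message to that segment's members."""
    delivered = defaultdict(list)
    for segment in sorted(segments):
        for user in sorted(segments[segment]):
            delivered[user].append(creatives[segment])
    return dict(delivered)

segments = profile(collect(EVENTS))
ads = target(segments, personalise(segments))
for user, messages in ads.items():
    print(user, messages)
```

Even in this toy version, the person at the end only sees the advert. The collected events, the segments and the reason for the match all stay hidden from them.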

Across platforms, users have little clarity as to the following:

- Data collection methods behind the targeting

- What type of data is collected (this usually includes sensitive data)

- What type of data was used to place that ad (Why am I seeing this ad?)

- Where that data came from

- Whether, by whom and for what purpose profiling is used

- The level of detail of profiling practices and an individual's (multiple) profiles

- How the individual's profile was used

- The categories of data that were inferred, from what data, and on what basis

- What data was inferred and why

- Segments/attributes that the person was linked to for the purposes of that ad, and why that happened

We note that "data policy" is out of scope in this consultation and tackled in "Data: A New Direction", to which PI also submitted comments. However, if the problems in the industry are to be tackled, they must be tackled as a whole and at least acknowledged here, particularly as the proposal frequently references the "supply chain." It is unfortunate therefore that "data policy" as these areas are described is out of scope for this proposal.

When referencing scam or fraudulent adverts, the number 1 consumer harm, it must be acknowledged that each actor uses the same tools and methods (for example real time bidding (RTB)), whether criminal or legitimate, to target adverts. Therefore, we cannot address the problem without tackling the whole supply chain and creating accountability at each stage.

We would also like to point to a category of harm that is not clearly included in the current list: misinformation. The harms of misinformation differ from "Misleading adverts". The latter refers to false or misleading claims about certain products and services, while misinformation refers to a wider type of harm that includes the distribution of incorrect or misleading information about a wide range of issues such as climate change, abortion, immigration, etc. As an example, the recent Eco-bot.net project showcases the spread of such disinformation campaigns on Twitter in the context of climate change. Additionally, these types of ads often target people on the basis of sensitive data (e.g. data from period trackers can be used to deliver ads to pregnant women to discourage them from seeking an abortion). This is somewhat covered under "Discriminatory targeting" but could be made more explicit.

The description of the range of industry harms that can be caused by online advertising.

We would like to add that tracking and identification of users hurts big publishers and that Real Time Bidding data leakage often supports untrustworthy websites. Because the same trackers can be embedded on reputable websites and less reputable ones, advertisers can pay to display their ads on the latter instead, once a person has been targeted and identified as a valuable customer. As an illustrative example, let's say a user visits a reputable publisher's website. Displaying an ad there would cost GBP1 because it’s a reputable publication. But that same person has some cookies following them around the web and has now been identified. Thanks to these cookies identifying this high-value customer, the advertiser that wants to target them with an ad can simply do so at a lower cost, paying GBP0.01 on a less reputable site that the person visits. While this might sound good for the advertiser, the consequence is the defunding of quality journalism, hurting big publishers while diverting funds to other sites.
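The arithmetic behind this example can be made explicit with a short sketch. The two prices are the illustrative figures used above. The volume of 1,000 ad impressions and the function name are assumptions added purely for illustration.

```python
from decimal import Decimal

# Illustrative prices from the example in the text (GBP per ad shown).
REPUTABLE_PRICE = Decimal("1.00")
LESS_REPUTABLE_PRICE = Decimal("0.01")

def revenue_shift(impressions):
    """Show how money moves when ads follow the user to the cheaper site."""
    lost_by_publisher = REPUTABLE_PRICE * impressions
    paid_to_other_site = LESS_REPUTABLE_PRICE * impressions
    return {
        "lost_by_reputable_publisher": lost_by_publisher,
        "paid_to_less_reputable_site": paid_to_other_site,
        "saved_by_advertiser": lost_by_publisher - paid_to_other_site,
    }

# 1,000 impressions is an assumed volume, chosen only for illustration.
shift = revenue_shift(1000)
for label, amount in shift.items():
    print(f"{label}: GBP {amount}")
```

Under these assumed numbers, the reputable publisher forgoes GBP1,000, the less reputable site receives GBP10, and the advertiser keeps the remaining GBP990.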

Are the main industry initiatives, consumer tools and campaigns designed to improve transparency and accountability in online advertising accurately captured in the consultation?

We note the reference to the Internet Advertising Bureau's (IAB UK) Gold Standard, and would like to comment on the framework they have developed for obtaining consent from consumers to the placing of cookies on their members' websites. Cookies are a crucial mechanism through which personal data is collected from consumers, in order to be shared with the adtech ecosystem. The Belgian data protection authority, in collaboration with its counterparts in other European countries, has recently found that the IAB's Transparency & Consent Framework (TCF) violated the GDPR, in particular that the TCF may lead to a loss of control by citizens over their personal information. IAB was ordered to delete all personal data collected through this framework. While this decision is not applicable in the UK since we left the EU, at this stage the legal framework remains the same, and therefore the TCF would also be found unlawful under the UK GDPR. We therefore recommend that the OAP considers the IAB's standards and practices in light of this decision, as doubts over the quality and legitimacy of the vast amounts of data collected through the TCF now taint the entire online advertising ecosystem.

Should advertising for VoD closer align to broadcasting standards or follow the same standards as those that apply to online?

We believe advertising for VoD should follow standards closer to online advertising rather than broadcasting standards. This is because VoD platforms allow for consumer interaction, which allows for data collection and consequent targeting of advertising. Broadcasting, like newspapers, presents a uni-directional flow of data (the user cannot interact), while online and VoD platforms allow for interaction and enable targeting based on data collected from those interactions.

PI agrees with DCMS' rationale for intervention, in particular that a lack of transparency and accountability in online advertising are the main drivers of harm found in online advertising content, placement, targeting, and industry harm.

This rationale aligns with PI's rationale for interventions we have made over the years. We too agree the lack of transparency and accountability are the main drivers of harm, allowing an ecosystem to develop that is opaque and complex and impossible for a data subject to navigate.

The Digital Services Act (DSA), currently being negotiated in the EU, is a good example of legislation that recognises the need for better transparency and accountability around digital advertising. We recommend that the OAP follows a similar approach.

PI does not consider the current industry-led self-regulatory regime for online advertising, administered by the ASA, to be effective at addressing the range of harms identified.

While the current regime administered by the ASA has been useful in regulating harmful advertising generally, it is ill-suited to the complexity of the online advertising ecosystem. This complexity allows actors in the industry to use practices that cause harm to consumers in an invisible way, for example (as explained above) by targeting adverts based on sensitive or illegally collected personal data. This is why we welcome the OAP's initiative to tackle online advertising as a separate issue with its own idiosyncrasies.

PI does not consider that the range of industry initiatives described in section 4.3 are effective in helping to address the range of harms set out in section 3.3.

As described, a central problem with the industry is the lack of transparency and accountability to the extent that the full range of harms is only just being discovered. PI uncovers harms every time we investigate the industry (see evidence in the "Impact Assessment" section) and this is why we advocate for the proposal to look at the targeting process as a whole.

PI believes "Backstopped regulation for all or some higher risk areas of harm" is the appropriate level of regulatory oversight for advertisers.

Through our engagement with a wide range of advertisers, we see an acknowledgment and understanding of the scale of the problem. We now need advertisers to come together as an industry and publicly demonstrate how they are tackling the problem and making positive changes to their advertising practices in order to erase the harms. It is in their interests to do so, for all the industry harms outlined in the OAP, but also because they hold the power to force a huge shift. We need to see movement on their part. In the short term we would support backstopped regulation to address certain types of harm, as certain advertisers will always seek to develop harmful content (e.g. body shaming or sexist ads). But if the industry does not urgently make more comprehensive changes to the way it feeds the adtech ecosystem, we would move to a position of statutory regulation.

PI believes "Statutory regulation" is the appropriate level of regulatory oversight for platforms.

Online platforms rely almost exclusively on online advertising for their revenue. They therefore have very little incentive to reduce the granularity of profiling and targeting that efficient ad delivery relies on, as they can sell this to their advertiser clients as their main competitive advantage. Statutory regulation is therefore the only effective way to address the numerous harms that come from their dominance of the online advertising market.

PI believes "Statutory regulation" is the appropriate level of regulatory oversight for intermediaries.

Due to the lack of transparency and accountability in this part of the industry in particular, it is our view that self-regulation is not appropriate here. A clear message needs to be sent that the status quo cannot continue, and accountability must be assured.

PI's views on a mixture of regulatory oversight.

Different levels of regulatory oversight may be warranted for different actors, as their roles in the online advertising ecosystem differ greatly, as do their resources and ability to effect change. However, we would warn against different levels of regulatory oversight for different types of harm, as this risks omitting from scrutiny certain harms that are either invisible or do not yet exist. In its investigative work, PI keeps uncovering new types of harm, such as from the collection of data from menstruation apps, that had not been detected before. The highest level of regulatory oversight should therefore be required across the ecosystem, especially regarding the heart of the online advertising ecosystem: data collection, profiling and targeting.

We generally support explicit obligations on intermediaries to play a role in ensuring that the CAP Code in place for advertisers is being effectively applied, as well as the alignment of any reforms with the e-commerce intermediary liability regime.

While we support this proposal and agree with the rationale for intervention, as a starting point we would like to see existing regulation (such as the UK GDPR) be properly and regularly enforced. We would rather resources were focused on enforcing existing data protection standards, and as a result more investigations opened into intermediaries and platforms. All the measures outlined in the OAP should work hand in hand with the UK GDPR, as reinforcements and compliance incentives rather than alternative standards.

IMPACT ASSESSMENT QUESTIONS:

Here we outline some of PI's investigations and resulting action by regulators:

Your Mental Health For Sale: PI analysed 136 mental health websites in France, Germany and the UK. We found that 76% contained third party trackers for marketing purposes. We found that several online tests for depression shared the actual test answers with third parties. This is sensitive information that the average user would not expect to be shared with a large number of companies and that is fed back into the online advertising industry.

An Unhealthy Diet of Targeted Ads: More and more companies selling diet programmes are targeting internet users with online tests with little to no clarity when it comes to what happens to your data. These tests request sensitive personal data about your medical history and mental health.

For at least two of the programmes we looked at, the data we entered did not affect the programme we were being sold, raising questions as to why the data is collected in the first place. We conducted traffic analysis to find out what happens to the data and discovered that one of them actively collected and shared sensitive personal data, while the poor security practices of the others meant the data was de facto accessible to third parties.

Bounty, a parenting club in the UK, was fined by the ICO in 2018 for unlawfully sharing personal data of 14 million mums & babies with 39 companies, but that data is still out there, likely being sold & resold.

PI's Facebook SDK research highlighted the platform's thirst for more data to make its advertising offer more valuable.

Some of PI's actions:

Complaints filed against 7 data brokers/ad tech/credit ref agencies in 2019.

Data Protection Authority (DPA) action as a result: