In Defense of Offensive Hacking Tools

Why would we ever let anyone hack anything, ever? Why are hacking tools that can patently be used for harm considered helpful? Let's try to address this in eight distinct points:

1) Ethical hacking is a counter proof to corporate claims of security.

Companies make products and claim they are secure, or privacy preserving. An ethical hack shows they are not. Ethical hackers produce counter-proofs to government or corporate claims of security, and thus defend us, piece by tiny piece. In other words, the absence of evidence of vulnerabilities is not evidence of their absence. We prefer companies with products that have had vulnerability fixes over those that say they have no problems at all.

Science progresses slowly, but it does progress. It does so because of 'falsifiability', and counter proofs.

Karl Popper opened his magnum opus on science and falsifiability, 'The Logic of Scientific Discovery' with a quote from Oscar Wilde: "Experience is the name every one gives to their mistakes." In this book Popper carefully explains that scientific theories cannot be proven true by experiments, but they can ONLY be proven false. He describes 'universal statements' (such as 'All swans are white'), and Carnap's work on probability and falsifiability. By contrast an 'existential statement' claims a state of affairs exists at some time and place, for example 'there exists a black swan'. Note that these two statements about swans are denials of each other. It is much easier to confirm an existential statement than a universal statement. In order to confirm that a black swan exists we would only need to see one single black swan. In order to confirm that all swans are white we would need to verify every swan that ever lived. While that might be possible in principle, it is practically impossible.
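The asymmetry between the two kinds of statement can be sketched in a few lines of Python. This is only an illustration: the function name `find_counterexample` and the list of swan sightings are made up for the example, not taken from Popper or from any library.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def find_counterexample(claim: Callable[[T], bool],
                        observations: Iterable[T]) -> Optional[T]:
    """Return the first observation that violates a universal claim.

    Returning None does NOT prove the claim; it only means that no
    counterexample turned up among the observations we happened to make.
    """
    for item in observations:
        if not claim(item):
            return item
    return None


def is_white(colour: str) -> bool:
    """The universal claim under test: 'all swans are white'."""
    return colour == "white"


# Hypothetical field notes: the colour of each swan seen so far.
observed_swans = ["white", "white", "white", "black", "white"]

# A single black swan is enough to falsify the universal statement.
counterexample = find_counterexample(is_white, observed_swans)

# Seeing only white swans leaves the claim unfalsified, not proven.
still_unproven = find_counterexample(is_white, ["white", "white"])
```

Here a single sighting settles the existential statement, while no finite list of white swans can settle the universal one. An exploit plays the same role against a claim that a product is secure.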

Now consider how this impacts security or privacy:

Software companies make universal statements, and hackers make existential ones:

Company: Our software is unbreakable.

Ethical hacker: Your vulnerability report statistics look like a hockey stick.

In other words, people who believe in the blackness of swans get 'lucky' because they are wise enough to look for black swans. Security and privacy engineering are nascent sciences, and we desperately need these counter proofs of hackability. These proofs take the form of exploits, and though they may indeed be used for evil, they may also be used for great good.

2) Tools make the theoretical into real risks that have to be addressed.

Exploits are experiments, and being able to ship them around the world to others who study them is very valuable. Just as scientists and students learn from repeating experiments, so too do security engineers learn from sharing hacking tools. Experiments need to be conducted safely, and ethical considerations must be aired and discussed, but we do not ban all experimentation to protect against people making pipe bombs.

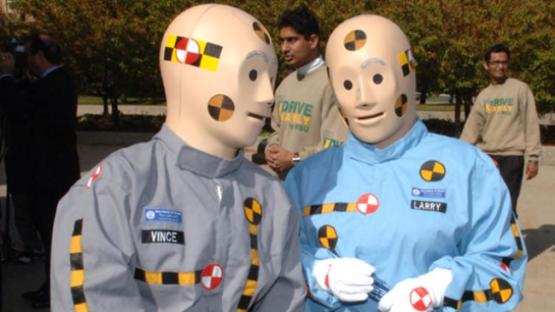

Likewise the ethics of exploitation is much in need of discussion, and at Privacy International we welcome that discussion, but we also recognise the science of security grows from hacking tools being shared with ethical security researchers. In other words, in computer security and privacy, defense is the child of offence. You cannot make cars safe without crash testing them, and likewise you cannot secure a computer without studying how it crashes.

3) Free tools teach the next generation. We do not want security and privacy only for the 1%.

A lot of time and money in the human rights community is being pumped into operational security (OPSEC) training, often on using encryption and other tools. How did the trainers get trained? They learned to hack, understood the risks, and adapted to the scale of the problem.

If we spend more time and energy on digital literacy, and de-stigmatise hacking, the beneficiaries will adjust their OPSEC daily, or hourly, without our intervention or authority. It could change the world if we do this carefully. Rather than the tyranny of techies telling you which app to use, we could be teaching people enough about coding, networks, surveillance history, and information security that they can decide for themselves, and adaptively: empowering consumers, not tyrannising those new to the internet with Fear, Uncertainty, Doubt, and specific product solutions that may or may not suit them.

Tools help in this regard, too. Many a student has started encrypting and signing email after I showed them how to spoof it.

It is easy to forget the internet was invented with typewriters and a postal system. It has literally transformed itself continuously since its early adoption. That has happened because of digital literacy, and providing tools to people in distant parts of the world who in turn wrote their own tools. People in diverse communities used the internet differently than expected, but that is the point. The internet is capable of great diversity. It can educate, or it can destroy. It reflects the world, because we reflect the world. It is hair splitting of epic proportions to try to distinguish the tools that built the internet from those used for hacking. For example, a compiler is a tool that computers use to educate humans in how to speak their language (painfully so in my particular case). However, compilers are also the source of malware. We give people compilers with many operating systems for free.

We should also teach people to wield hacking tools ethically and with thoughtful intentions.

4) Driving research into unethical and unobservable markets is harmful.

At the highest possible level of abstraction, information asymmetry is the driver of harm in hacking.

When someone has access to your devices, networks, or data, without your knowledge, you go about your daily business without knowing that your mail is being read, your credit cards used, or your computer is abusing someone else's website. This asymmetry is what we want to prevent - we want violations of our privacy and security to be observable. We want to know how much it costs to compromise a particular piece of software, and how it was done.

Criminalising and stigmatising hacking drives it into less ethical communities. It is the use of knowledge, not the possession of it, that makes people dangerous. If you stigmatise and outlaw knowledge, then the only place to learn will be prison, and the ethical campaign for hearts and minds will be lost accordingly.

5) The ethical hacking community is wrestling with its newfound relevance.

This community used to be more obscure, more marginalised, until society started to wake up to the risks. The risks are here, and are already detailed widely on this blog and in the mainstream news. The point is simply that the ethics of that community were refined in intense isolation, over 30-40 years. This community can seem to be techno-negative, but in fact represents a sort of depressive realism about technology. Most people buy a gadget without thought to how it works, or where the data goes and what other uses it is put to. Ethical hackers seek to understand that technology which the rest of society consumes.

The art here is for technologists to be less elitist about the debate, and for those new to the debate not to trivialise the technology or those who came to the debate 30 years earlier.

The ethical hacking community IS an emergent form of regulation, keeping companies and governments accountable for their safety, security, and privacy claims (see '1' above). Like virologists, technologists can either create vaccines or biological weapons, and those ethical norms and divisions are still forming. It is not the acquisition of knowledge that should be marginalised or criminalised, but rather the application of that knowledge in a rapidly shifting world.

For example, I have always hated the "black hat/white hat" characterisation of morality, primarily because it is a cartoonish and monochrome version of my everyday ethical decisions. I doubt all the colours in the electromagnetic spectrum could capture the nuances of the decisions I had to make about disclosing critical infrastructure vulnerabilities and infected systems around the world.

JOIN this debate rather than imagine it doesn't happen without you... Encourage and reward those that perform the technological equivalent of eradicating polio, instead of imagining we all live in basements and break the law with wild abandon. As unto Salk open sourcing the polio vaccine, I share vulnerabilities and exploits to improve the world, not to watch it burn. I know I don't have the cure, but I can reduce the harm. Recognise the role models you already have instead of imagining they don't exist.

6) Detecting exploits saves lives. You can't detect what you don't know exists.

An exploit is surprising, unless you've seen them for years. The art is in identifying the similarity in many of them, and then using this to advance the science of cyber defenses. Like the adaptive immune system of the body, exploits become part of the immunological memory of the network.

It is by exposing the exploits to the disinfectant of sunlight that we defund and deprive our adversaries of their tools. In a network of civil society and charities, that herd immunity comes from sharing samples safely, not from outlawing them. Detecting exploits against NGOs and charities literally saves lives, and I am proud to be doing such work.

7) Hacking is all about information asymmetry. Reduce that asymmetry as a design principle.

The harm of hacking flows primarily from information asymmetry. The vendor is not told of the exploit; the victim cannot detect the harm until it is too late. The civil society counter-hacker does not know what exploits a government will deploy against them, and the attackers do not know what defenses await them.

As a design principle for civil defense, we should reduce such uncertainty. Know the price of exploits, write about it. Know how they work, and write some yourself. You might just be as lucky as I am and patch something that saves someone's life one day.

8) Nation states hacking each other is very different to nation states hacking their citizens. Or even worse, each other's citizens.

According to a UN report, we know at least 27 countries are funding offensive cyber capabilities. Of course many more are likely to have followed suit in the intervening period.

Indeed, a more recent WSJ article mentions 60 countries that have offensive programs.

This makes sense when you take into account hacking as espionage. However, the power of nation state hacking doesn't seem to have any checks and balances.

Hacking is also being used in law enforcement, and indeed extra-jurisdictionally. Let me put this simply: Do you trust *every* police force in the world enough to read all your emails and also protect your human rights at the very same time? Because that is the international precedent the FBI was setting in the FBI-Playpen case, where it engaged in hacking computers and networks all over the world.

What is missing from all of this is quite simply consumer protection. That protection will emerge from the open, rapid, adaptive immune response and experimental science. It will take time, but it will come to pass.

In the meantime, do not confuse the civil-society-defending technologist with the information-asymmetry-increasing zero-day vendor. One defines security as a public good and releases exploits transparently for science; the other keeps them secret for profit and chooses to turn a blind eye to the ethics of who uses them and how.

Eireann Leverett