Benefitting whom? An overview of companies profiting from “digital welfare”

Could private companies be the only ones really profiting from digital welfare? This overview looks at the big players.

- Companies like IBM, MasterCard and credit scoring agencies are developing programmes that reshape our access to welfare.

- A particular example is the case of the London Counter Fraud Hub where an investigation by Privacy International revealed how several London councils (Camden, Croydon, Ealing and Islington) were exploiting data about their own residents and using data from credit scoring agencies to track people suspected of housing fraud.

All around the world people rely on state support in order to survive. From healthcare, to benefits for unemployment or disability or pensions, at any stage of life we may need to turn to the state for some help. And tech companies have realised there is a profit to be made.

This is why they have been selling a narrative that relying on technology can improve access to and delivery of social benefits. The issue is that governments have been buying it. This narrative comes along with a discourse around fraud detection and how technology could be used to spot so-called “benefits cheats.” In his report on Digital Welfare, released in October 2019, the UN Special Rapporteur on Extreme Poverty addressed what he referred to as the “irresistible attractions for governments to move in this direction.”

“Corporate actors are now centrally involved in large parts of the welfare system, and when taken together with the ever-expanding reach of other forms of surveillance capitalism, intangible human rights values can be assumed to be worth as much as the shares of a bankrupt corporation.”

- UN Special Rapporteur on Extreme Poverty, A/74/48037, paragraph 64

When government buys into this narrative it often means contracting private companies that are offering tech solutions (both hardware and software) to facilitate this transition towards so-called digital welfare.

Digital technology has created what can be described as a ‘government-industry complex’ that manages and regulates social protection programmes. Some of the concerning features of this ‘government-industry complex’ we have observed so far include:

- poor governance of social protection policies, including closed, exclusionary and opaque decision-making;

- limited transparency and accountability of the systems and infrastructure;

- access tied to a rigid national identification system;

- excessive data collection and processing;

- data exploitation by default;

- and multi-purpose use and interoperability as the endgame.

Unfortunately, we do not see this ‘government-industry complex’ slowing down anytime soon. On the contrary, with two global crises linked to one another – the Covid-19 health crisis and the economic crisis it has triggered – more and more people are turning to their state in need of support. Whilst public-private partnerships were already a cause for concern before the pandemic, scrutiny of them will be more important than ever, during and after the Covid-19 crisis.

And some questions will need to be answered: who really shapes our social benefits system – the companies offering the services, the governments buying them, or people’s needs? What are the problems identified and the proposed solutions we are trying to achieve – ensuring that people who need social benefits get them or excluding alleged fraudsters?

How much control and understanding do governments have over the structures they purchase? What do companies get out of it and how does this feed their data exploitative business models? Who sets the parameters of the design? How do we ensure that the systems we create do not contribute to further marginalisation of groups in vulnerable situations? Or that the very delivery does not reinforce patriarchal structures that, in turn, reinforce the gender binary or expect women to remain in care roles?

This is an overview of some of the major companies that have emerged as relevant actors in our global research on the impact that accessing social benefits has on privacy and the enjoyment of fundamental rights.

This is not an exhaustive list. If you have come across others, reach out to us: [email protected]

IBM

Historically, IBM has always played a role in the provision of computer services to state actors. In the late 19th Century, Herman Hollerith created the Tabulating Machine Company for the US Census Bureau (the company would then be merged with three others to form what is now known as IBM). In 1937, when the US government rolled out Social Security numbers to 26 million American workers, IBM developed a card-punch and tabulating device to allow the government to process this unprecedented amount of data. It was the very same technology that IBM sold to the Nazis a few years later so they could identify Jews, seize their assets, deport and exterminate them.

In 2017, we published a report in which we looked at the role IBM had played in shaping the concept of smart cities. They, in fact, coined the term “smart city” and were a leading actor in the exploitation of data in the public space.

Today, IBM offers a wide range of options for governments across the world wishing to automate their social benefits systems. Their child welfare programme focuses on the need of social workers to collect substantial information on children and their families in order to best address their needs; IBM offers governments platforms to handle these datasets.

At the moment, their services appear largely focused on creating systems that facilitate data management and visualisation: they ensure the data is all in one place, stored in a uniform way and easily shareable to facilitate the decision-making process about children.

These are only the building blocks of IBM’s child-focussed services. In their white paper on child welfare, IBM describes their vision for the future. For them, child welfare will be transformed by cognitive computing, a type of computing that can process natural languages. Combining information from school records, medical information, and police records, for instance, cognitive computing systems will directly make recommendations to social workers about the best solutions for children.

IBM’s services for social workers extend beyond child welfare. They offer governments the promise of “gaining a 360-degree view of each citizen and their specific needs.” In their promotional video IBM talk about how they are working to “transform how [human services organisations around the world] deliver services.” But they also insist that they do it “to make sure that when a government is providing services, it’s doing it without fraud, that it’s benefitting the tax-payers as much as it’s benefitting the people receiving services.” They are thus pushing the narrative that social protections are being abused by fraudsters trying to cheat the system.

This narrative fits the product they are selling. In North Carolina, USA, for instance, they created an analytics system to attempt to detect Medicaid fraud. Interviewed in IBM’s promotional video, Al Delia, the Acting Secretary of the North Carolina Department of Health and Human Services, says of people who are flagged by the system: “We can stop paying them [benefits] the minute we identify them as potential rip-off artists.”

This statement raises a number of concerns, as it suggests that anyone suspected of being a fraudster, rightly or wrongly, is automatically prevented from accessing healthcare benefits without being given a chance to appeal the decision.

North Carolina is not the first state where IBM has worked on Medicaid. As explained by Virginia Eubanks, back in 2006, IBM signed a $1.16 billion contract with an Indiana Governor to lead a consortium of tech companies in order to modernise processes for accessing Medicaid, food-stamps, and cash-assistance programmes.

According to Eubanks, at the time, many applicants saw their applications for benefits denied, for instance if they failed to pick up a phone call or if they sent in a form that the machine could not read. By February 2008, the number of households receiving food-stamps in Delaware County dropped by 7% even though the numbers of applications had increased by 4%. Differently-abled people were primarily affected.

In 2009, the Governor cancelled the contract. The Marion Superior Court ruled in the case State of Indiana v. IBM that the state was in breach of contract and had to pay IBM $52 million in damages.

IBM’s Cúram, a customizable off-the-shelf IBM software package, was used as the basis for Ontario’s Social Assistance Management System (SAMS), which has been used to automatically generate decisions on eligibility for cash transfers and other benefits. Cúram was also used in welfare programs in Canada, the United States, Germany, Australia and New Zealand.

While the cases we highlighted above were largely based in the US, IBM has an international reach and plans to apply the models it is developing for the US to the rest of the world. On the page dedicated to Social Programmes on their Mexico website, IBM offers services similar to the ones offered on the US websites. The case studies they give as examples of their work are all US-based, translated into Spanish.

This suggests a worrying reality where governments across the world all rely on the same Western-based companies with a one-size-fits-all approach to social protection, which may fail to take into account the specificity of the local context.

Mastercard

Using the discourse of inclusive growth and the promise of including ever more people in the market economy, MasterCard promotes a cashless vision of the world, and does so by offering humanitarian organisations and governments cashless payment options that can be used for the distribution of benefits.

The MasterCard Aid Network for instance offers humanitarian organisations a card system that beneficiaries can use at selected participating merchants. Humanitarian organisations thus retain control over what beneficiaries can purchase and in what quantity.

Back in 2012, MasterCard was involved in a programme that aimed to deliver 2.5 million MasterCard debit cards to welfare beneficiaries in South Africa. The cards were meant to have biometric functionality in order to identify the recipients. The programme was discontinued after the South African Supreme Court ruled that there was a fault in the tender process, before its effects on privacy could be properly assessed.

While cashless systems may sound appealing to governments and humanitarian organisations as a way to ensure the money is received by those who need it, the implication is that poverty comes at the cost of privacy. When every expense someone makes is monitored and controlled by a government or an organisation, beneficiaries are effectively trading their dignity and autonomy for the benefits they need to survive.

Sodexo

In the UK, a similar welfare model is in place for some asylum seekers, who have to rely on the ASPEN card for their everyday expenses. Depending on their application status, some are prevented from withdrawing cash, meaning that every single expense they make and their location can be monitored at all times by the UK Home Office.

The ASPEN card is a Visa card, but it is the French company Sodexo that has been contracted to put the system in place and was the main private provider up until early 2020. Sodexo was originally a food service and catering company. Today the company has expanded to facilities, workplace and technical management. In 2017 it was included in the Global 500 ranking.

With sections of their website dedicated to defence, government, healthcare, justice and schools, government bodies appear to be their primary target. Their narrative is clear: Sodexo is the company that governments should trust in an era of “shrinking budgets” to “improve their employees’ productivity” while “lowering taxpayer costs.”

Sodexo works internationally, in Europe, North America, the Middle East, Asia and Latin America. A key part of Sodexo’s work involves running prisons across the world. In Chile for instance, their programme involves getting inmates to work for Sodexo.

In Venezuela, Sodexo produces cards for employers who offer benefits to their employees, as required by the law. Food and leisure cards are among those handed to employees so they can spend money in selected shops and venues. Sodexo also offers a platform so that employers can pay directly for the childcare of their employees.

The hidden players: Credit scoring agencies

Credit scoring agencies also play a role in the automation of welfare services. The data they collect can be used by government bodies in an attempt to detect alleged fraud. The original purpose of credit scoring agencies was to collect as much information as possible about an individual from different sources in order to assess whether a person should be granted a loan. But this information is now used by governments in an attempt to predict who may be committing fraud. Back in 2010, the BBC reported that Experian had a project to detect fraud in housing benefit and council benefit claims that would be rolled out to 380 local authorities in the UK.

The London Counter Fraud Hub: what we uncovered

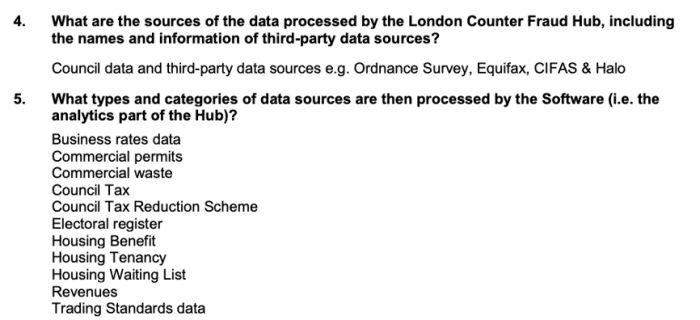

Nearly ten years later, we filed a series of FOI requests to four London councils (Ealing, Islington, Camden, Croydon) concerning the London Counter Fraud Hub, a system designed by the Chartered Institute of Public Finance & Accountancy (CIPFA) in order to detect fraud in applications for the council tax single person discount. The system was meant to process large amounts of data to identify fraudsters.

The system was a cause of great concern when it was first revealed in the media. With more and more discussions on algorithmic bias and the revelation that the system had a 20% failure rate, many feared they would see their benefits cut unfairly.

Camden and Islington wrote to explain to us that Ealing had been in charge of the contract and that they therefore had no information or only limited information on the contract, respectively, despite being pilot authorities.

Islington Council initially wrote that Ealing Council were preparing the response to our FOI request, which would then be shared with the other pilot authorities before replying to us. Camden Council wrote that the questions should be directed to the London Borough of Ealing as they were the Lead Authority on the contract.

Croydon and Ealing gave identical responses and revealed that the system was used over a trial period between August 2017 and October 2018. When we asked about the source of the data that was processed, Equifax was named as one of the third-party data sources used for this system.

The full extent to which credit scoring agencies and public-private partnerships are operating to further develop a digital welfare state in the UK is unknown. In fact, the lack of transparency regarding this very contract should be worrying to Londoners. While the London Counter Fraud Hub appears to no longer be in use, the fact that the councils were sharing data collected from their own residents – as well as data they had obtained from a credit agency – with a private company, all to attempt to identify whether people live alone or not, should raise important questions about the councils’ attitude towards consent. Moreover, the fact that two of the councils admitted they needed to rely on the third to give us an answer, and one (Camden) requested that we file a separate FOI request to obtain an answer, also raises questions about accountability and about the information that even governments may not have when they sign deals with private companies.

The screenshot below is an excerpt from the responses we got. You can find all the responses to our FOI requests in the documents available at the bottom of this page.

London councils may still have to face more consequences from their attempted partnership with CIPFA. An article published in July 2020 in the MJ, a publication for council chief executives, revealed that CIPFA was preparing a potential legal action against the council of Ealing over a contract dispute.

We asked the council of Ealing why they had not pursued the roll-out of the London Counter Fraud Hub. They replied:

The London Counter Fraud Hub did not progress to a full operational stage after the initial pilot phase ended due to the lack of sign up from other local authorities.

We have seen similar instances of the involvement of data brokers in other countries too. Our partner Fundación Karisma in Colombia revealed the role of Experian, an Irish-domiciled multinational consumer credit reporting company. Experian is no stranger. We have come across them in our work exposing and challenging the hidden data ecosystem as a global actor that describes itself as “Unlocking the power of data to help create a better tomorrow”. They have developed from credit and consumer reporting to also offer marketing data services.

Fundación Karisma researched Colombia’s System of Identification of Social Program Beneficiaries (SISBÉN), which produces a household vulnerability index that is used to identify the beneficiaries of social assistance programmes in Colombia. They exposed how, as it was trying to modify the SISBÉN algorithm, the Department of National Planning (DNP) had decided to include a prediction of "capacity to generate income" in an attempt to reduce the number of people who could be eligible for social benefits, and how an exchange system was created with 34 public and private databases to verify the data that was being reported by applicants.

It emerged that in August 2018, the DNP signed an agreement with Experian to use their credit rating database. The agreement included the use of two services provided by Experian. The first was a product called Quanto, which would enable the estimation of a person’s income level. The second was that Experian would give the DNP access to information about individuals registered in SISBÉN, to cross-check the two sets of data and find inconsistencies in the information. Fundación Karisma reported that, in exchange, Experian would have access to the data of people registered in SISBÉN to develop applications and other services to be used by banking institutions in Colombia.

The data Experian provided included: identification data such as gender and age; credit data such as the number of credit cards and percentage of use; and contract data with telecommunication companies regarding mobile lines and the value of devices. Experian made it clear that it could not verify or vouch for the accuracy and integrity of the data, given that it was collected from third parties.

Conclusion – Austerity, a juicy business for companies benefitting from the outsourcing of the welfare state

The companies we presented in this overview are very diverse in their business models. Yet all have a narrative in common: in times of austerity, when budgets are tight, technology should be used to make welfare systems more efficient by reducing by default the number of people who receive benefits, through the hunt for alleged fraudsters. While the companies we reviewed are all European or American, they seek to impose this narrative on the rest of the world.

Companies cannot hide behind pretences of intellectual property or commercial secrets. Transparency about the role of the private sector in the design and deployment of welfare programmes is the minimum. Companies should be pro-actively providing information about the solutions they are providing to governments around the world, including how they work and how they are deployed. There should also be more oversight and accountability about the bidding and procurement process, and how the systems are designed in collaboration, if at all, with governments.

Furthermore, as part of their due diligence and accountability obligations, governments and companies must undertake in-depth data protection and human rights impact assessments to explore issues of privacy and discrimination in particular.

In our Framework for Researching Social Benefits, we have attempted to debunk the myth that welfare systems are riddled with fraud. We think that an efficient welfare system is one that is efficient at delivering benefits. Understanding the local context and realities of people who are receiving benefits is key, and the “one size fits all” models that Western companies are trying to apply across the world benefit no one but the companies themselves.

The consequences of this model for populations in vulnerable situations are real: the cashless systems we see being promoted mean that poverty or asylum-seeking come with the additional burden of having to renounce one’s fundamental right to privacy. People have to submit themselves to the prying eyes of the state and the companies it contracts in order to obtain the money they need to survive.

Not only are those systems disproportionately likely to affect marginalised groups, as demonstrated by Virginia Eubanks in her book Automating Inequality, they also often contribute to reinforcing patriarchal systems of oppression. Indeed, many benefits systems rely on the assumption of traditional family structures. As we see systems that aim, for instance, to predict the chances that children will fall victim to abuse, are we taking the risk that families that differ from traditional norms will be more exposed to unjustified control?

Obtaining – or failing to obtain – benefits can also be what stands in the way of a person’s independence from an abusive partner. In order to prove that one is not committing fraud, one often needs to comply with a certain idea of what “being single” or what “being a mother” looks like.

This race to detect fraud also means that people who may have been wrongly identified as fraudsters risk losing benefits. The London Counter Fraud Hub, for instance, was known to have a 20% error rate. In many cases, this will result in destitution for those whose benefits are withdrawn.

At Privacy International, we will be dedicating ourselves to telling the stories of those affected by the roll-out of the digital welfare state, and in particular those who are at the mercy of contracts with companies that benefit from austerity measures. And we will be there to challenge governments who choose to protect the interests of companies over the dignity of their citizens.