Privacy and Sexual and Reproductive Health in the Post-Roe World

Concerning news from the US about restrictions on the right to abortion has made many people reconsider their use of platforms that process health data. Here, we provide an overview of our research findings on the intersection of privacy and sexual and reproductive health.

- Users of period-tracking apps and other products and/or services processing health data relating to abortion care are understandably alarmed by the recent developments in the US

- This is not the first time that data has been harnessed to obstruct the exercise of reproductive rights and access to related services: data-exploitative tactics are routinely deployed by opponents of abortion

- The burden should be on private companies to ensure that their users are informed of all the implications of using their product or service, and that their data is adequately protected

In the wake of the US Supreme Court’s decision in Dobbs v Jackson Women's Health Organization to overturn Roe v Wade, headlines have been dominated by conversations about privacy and fears of how the criminalisation of abortion care and surveillance by law enforcement will play out in a tech-driven world.

This discussion is increasingly important as governments move towards digitising their healthcare systems and as more individuals choose to manage their health through digital means. This is certainly the case for reproductive healthcare, with the proliferation of period-tracking apps that give users insights into their menstrual cycle and are used as a contraceptive tool or to assist with fertility tracking. However, the extent to which companies and app developers uphold privacy requirements, and whether users’ data is being exploited and shared with third parties, including law enforcement, with or without users’ knowledge, is of deep concern. This is especially so in the current context, as the criminalisation of abortion will drive more people online to manage their reproductive health covertly, including accessing abortion care.

In 2019-2020, Privacy International (PI), along with our global partners, began working on exposing the consequences of the digitalisation of health for individuals’ privacy. Since then, we’ve been exploring extensive data collection and data-sharing practices by period-tracking apps, as well as other ways in which digital platforms risk being exploited to restrict access to reproductive healthcare or to mislead individuals seeking it.

Information concerning an individual’s health constitutes a key element of an individual’s right to privacy. In an increasingly digital and tech-driven era, the industry built on collecting and using health-related data, frequently without the individual’s consent or awareness, and the growing number of data breaches are of enormous concern. Against this backdrop, the UN Special Rapporteur on the Right to Privacy published a report on the protection and use of health-related data in 2019. Within this context, there is increasing awareness of the sensitive nature of reproductive health data, including data about sexual activity or sexual orientation, and of the need for additional safeguards and protections.

In this piece, PI reflects on the findings of previous research conducted by PI and other experts, and on what these findings mean in the current context of the criminalisation of abortion following the overturning of Roe v Wade.

Period-Tracking Apps

Period-tracking apps, by default, collect vast amounts of personal and sensitive information from their users. They collect information about your health, your sex life, your mood and more, all in exchange for predicting what day of the month you’re most fertile or the date of your next period. Pregnancy-tracking apps, which allow pregnant people to input their symptoms and offer advice and guidance throughout their pregnancy, similarly collect health data and pose the same risks to an individual’s privacy.

Such applications have been the focus of extensive research by civil society organisations (CSOs) and investigative journalists for many years, and the concerns they raise are well documented.

Data collection

In 2020, PI conducted research on period-tracking apps by sending Data Subject Access Requests (DSARs) under the General Data Protection Regulation (GDPR) (see our guide on how to write them here) to Clue by Biowink, Flo by Flo Health, Maya by PlackalTech, MIA by Femhealth Technologies Limited, and Oky by UNICEF. Of the five apps PI targeted, only two provided a detailed response and breakdown of data, one responded but did not provide PI with the data, one never responded, and one refused to let PI publish the data.

The apps that did respond provided PI with multiple pages detailing the sensitive health data that the user had entered into the app. This included data such as the user’s diet (for example, whether they drank alcohol); their sexual activity and whether they experienced pain during intercourse; details of various health conditions, such as whether they suffered from cystitis and, if so, how often; and whether they were on any medication such as birth control. Whether the vast amounts of personal health data collected are necessary for the purpose of predicting the user’s next period or fertility window is questionable on medical grounds alone. While it is important to note that entering such data is at the user’s own discretion, there are concerns regarding information collected and stored by the apps, often unbeknownst to the user, concerning their lifestyle, health and activities. PI’s findings highlighted the difference between information that was knowingly and voluntarily entered by the user and information (i.e. metadata) that was collected without the user’s knowledge and without clear transparency. Data collected without the user’s knowledge included sensitive information tied to the user’s location.

It’s not just health data that’s at stake: other types of data can also be processed by apps. In PI’s research, the data returned was connected not only to our user ID and device ID but also to our location, meaning that the data entered by the user was identifiable by location. We had to redact this location to protect our researcher’s privacy, but it was precise enough to identify the borough and administrative district where they were located.

Data-storage

There are further ambiguities and nuances about how data is stored by period-tracking apps. The apps are not always transparent and do not actively inform users whether their data is stored locally, remaining on their personal device, or stored on a server. If the data is stored on a server rather than on the device, this can have consequences for how it is used and potentially exploited.

Essentially, if an individual enters their data into a period-tracking app that stores information on a server, deleting the app does not equal erasing the data. The data is not automatically erased and is likely to remain on the company’s server. This means that unless the user expressly requests deletion, the company may retain the data for a period of time after the app is deleted, during which it can continue to be shared and remain vulnerable to exploitation.

Data-sharing

Data-sharing with the private sector

There are many ways in which data collected by period-tracking apps can be shared with third parties, and storing data on the app’s server makes it easy for large volumes of personal data to be shared with big corporations. Companies developing period-tracking apps may present themselves as offering transparent and efficient means for users to keep on top of their reproductive health. Even if that is genuinely their intention, they are still companies whose continued existence relies on making a profit. One way in which companies profit from apps marketed as “free” is to collect, share or sell users’ personal data to third-party companies for advertising purposes. For example, the data of pregnant people is particularly valuable to advertisers, as expectant parents are consumers who are likely to change their purchasing habits. These concerns have been borne out in practice. In the UK, the company Bounty was fined £400,000 by the UK’s data protection authority for illegally sharing the personal information of mums and babies as part of its services as a “data broker”.

In 2019, PI targeted five popular period-tracking apps to uncover the extent to which period-tracking app companies were engaging in data-sharing practices. Using Privacy International's data interception environment, we conducted a dynamic analysis of some of the most popular apps. This research uncovered that many apps were sharing data with third-party entities not disclosed in the apps’ privacy policies. PI found that, of all the apps reviewed, two were conducting what could be described as extensive data-sharing with Facebook. The data shared with Facebook ranged from the user’s mood and health symptoms to their use of contraception and the purposes for which they were using the app. Notably, information typed into the “diary” function of one of the apps was shared in its entirety with Facebook.

Data-sharing with law enforcement

The situation is further complicated when law enforcement is involved, as data protection laws, where they exist, generally exclude law enforcement processing from their scope. Therefore, in certain circumstances, and depending on the legal framework in place, companies can be compelled by law enforcement bodies or courts to hand over personal data for criminal investigation purposes.

As recent experience from the US shows, period-tracking apps take different approaches to law enforcement requests: while some refuse to disclose data in the absence of a warrant legally compelling them to do so, others have previously offered to disclose data to law enforcement voluntarily. There is also a lack of transparency regarding the requests these companies receive, as they are often bound by gag clauses in connection with law enforcement requests and are therefore unable to report them to the public or to the relevant data subject until a certain amount of time has elapsed.

However, that is not the only risk. Without sufficient internal checks and balances, malicious actors can successfully pose as law enforcement agents. Earlier this year, it was reported that Apple and Meta mistakenly disclosed data in response to a forged law enforcement request.

Data breaches

Another way in which data can be shared with third parties, albeit unwillingly, is through a data breach. In contrast to the deliberate act of “data-sharing” by companies, a “data breach” is unintended by the company. Although PI’s 2019 research did not identify any publicly disclosed data breaches at the companies we studied, it is still important to highlight that this sensitive personal information is also potentially vulnerable to attacks.

Data breaches regularly affect both governments and companies as a result of security failures. In light of the recent restrictions on abortion rights in the United States, data revealing that someone has sought abortion care is likely to attract increased interest and may become a more attractive target for external attacks. In the past, hackers have broken into databases containing the details of women who had sought abortion care. In a recent example from the US, the data of 400,000 Planned Parenthood patients was stolen in a ransomware attack.

Beyond Period-Tracking Apps: the Wider Ecosystem of Data

It is important to note that data derived from period-tracking apps is not the only data being exploited. We are increasingly seeing data being exploited to delay and curtail access to reproductive healthcare. With the criminalisation of abortion care across US states, we are likely to see these tactics deployed in more sophisticated and widespread ways to crack down on access to medically accurate reproductive health information. Furthermore, these tactics could be weaponised to criminalise pregnant people seeking reproductive healthcare and access to safe abortion care.

In 2020, PI documented 10 data-exploitative tactics used by anti-abortion groups to limit people’s access to reproductive healthcare, and PI’s partner organisations researched such tactics in their own jurisdictions. Tactics included targeted advertising using geo-fencing, as well as data collection by crisis pregnancy centres through online chat services.

Location data, advertisers and data brokers

Location data doesn’t need to be associated with a person by name to raise privacy concerns. Often, location data can be associated with an Ad ID, an identifier generated by the phone’s operating system that advertisers can use in apps and websites to uniquely identify a user online and offer services such as targeted advertising. Where location data is tied to an identifier, it can become incriminating if associated with a user in a state where abortion has been criminalised. You can find tips on how to reset your Ad ID here.

Further, location data placing individuals at abortion clinics is known to be sold by data brokers to third parties. Data broker SafeGraph was recently reported to have sold location datasets covering more than 600 Planned Parenthood locations in the United States, some of which provided abortion services. While the location data in question was reportedly anonymised, anonymisation is not always futureproof: data that appears anonymous today may later be re-identified, for example by combining it with other datasets.

However, the sharing of location data can be harmful even if it is not tied to a particular person. PI has identified targeted advertising of scientifically dubious health information as a tactic deployed by those opposing abortion. For example, ads about "abortion pill reversal", which we spotted as early as 2020, have continued to make the rounds on social media platforms. That is not all. Targeted advertising, some of it enabled through geo-fencing, reportedly allowed advertisers to target people going to abortion clinics. Geo-fencing is the creation of a virtual boundary around an area that allows software to trigger a response or alert when a mobile phone enters or leaves that area. When used by opponents of abortion, geo-fencing can leave visitors to abortion clinics, many of them in a vulnerable position, having to deal with potentially unwelcome or disturbing advertising.
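To make the geo-fencing mechanism described above more concrete, here is a minimal sketch in Python. It assumes a simple circular fence (a centre point plus a radius in metres) and a sequence of location readings from a phone; the names `GeoFence`, `haversine_m` and `fence_events` are hypothetical, and real advertising platforms use their own, undisclosed logic. The point it illustrates is how little is needed: no name, only a stream of coordinates, to tell when a device crosses a boundary.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass(frozen=True)
class GeoFence:
    """Hypothetical circular fence: a centre point and a radius in metres."""
    lat: float
    lon: float
    radius_m: float

    def contains(self, lat: float, lon: float) -> bool:
        return haversine_m(self.lat, self.lon, lat, lon) <= self.radius_m


def fence_events(fence: GeoFence, pings: list[tuple[float, float]]) -> list[tuple[str, int]]:
    """Return ("enter" | "exit", index) each time consecutive pings cross the boundary."""
    events = []
    inside = False  # assume the device starts outside the fence
    for i, (lat, lon) in enumerate(pings):
        now_inside = fence.contains(lat, lon)
        if now_inside and not inside:
            events.append(("enter", i))   # this is where an ad or alert would be triggered
        elif inside and not now_inside:
            events.append(("exit", i))
        inside = now_inside
    return events


if __name__ == "__main__":
    # Made-up coordinates for illustration only, not a real location.
    fence = GeoFence(lat=0.0, lon=0.0, radius_m=100.0)
    pings = [(0.01, 0.0), (0.0005, 0.0), (0.0, 0.0), (0.01, 0.0)]
    print(fence_events(fence, pings))  # [('enter', 1), ('exit', 3)]
```

The second reading is about 56 metres from the centre and so falls inside the 100-metre fence, while the first and last are more than a kilometre away; the sketch therefore reports one entry and one exit.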

Crisis-Pregnancy Centres (CPCs)

A crisis pregnancy refers to an unwanted pregnancy. "CPC" is the term used to describe an organisation that presents itself as assisting pregnant people facing a crisis pregnancy, and that reportedly sometimes masquerades as a licensed medical facility. CPCs have been criticised for providing those seeking medical help with false and misleading information.

CPCs are often exempt from the regulatory oversight that healthcare facilities are typically subject to. In the US, for example, the Health Insurance Portability and Accountability Act (HIPAA), which sets national standards for the regulation and protection of health data, does not cover CPCs, as they are not “covered entities” within the meaning of HIPAA.

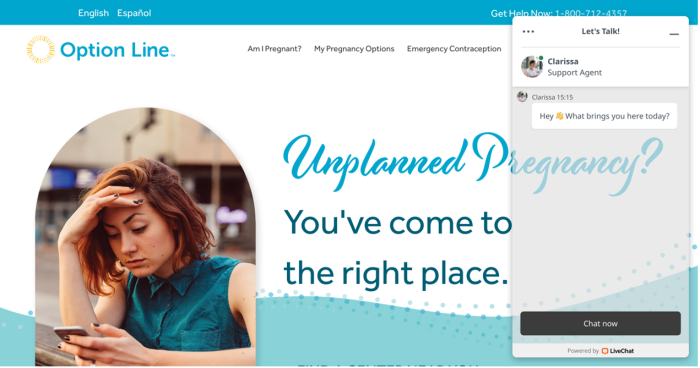

PI has previously voiced concerns about the data management practices and software used by crisis pregnancy centres. In 2020, PI found that many anti-abortion organisations were using technology to collect personal information from pregnant people who used their services. This included using chat services hosted on non-encrypted platforms such as Facebook chat. These chat services were used to collect personal data in order to build detailed dossiers about a pregnant person’s health. Other chat services, such as Option Line, ask for demographic and location information, as well as whether someone is considering an abortion.

Pregnancy-related health services, including pre and post-natal care, assistance during childbirth, and emergency obstetric care

As part of wider developments in the digital health sector, there has also been a notable increase in initiatives focusing on the delivery of reproductive, maternal, newborn and child health (RMNCH) services. As with period-tracking apps, we are seeing human rights and privacy considerations overlooked in the implementation of RMNCH digital health services.

RMNCH digital health services include digital information registries that facilitate scheduling through SMS; remote access to care and counselling; telemedicine; health workers using a mobile phone to track pregnant people throughout their pregnancy or a child over their immunisation cycle; and the use of sensors and wearable devices. Together, these initiatives are creating a wider digital infrastructure to monitor and track those accessing pregnancy-related health services, including pre- and post-natal care, assistance during childbirth, and emergency obstetric care. While the intention is to tackle ongoing concerns such as maternal and child mortality, without the necessary safeguards these tools can create serious risks for the people they are meant to serve.

The Mother and Child Tracking System (MCTS), as documented by the Centre for Internet and Society (CIS), was an Indian government initiative developed in 2009 to collect data on maternal and child health. Between 2011 and 2018, 120 million pregnant people and 111 million children were registered on the MCTS. The central database stores personal data collected at each visit, from conception to 42 days postpartum. The records of each mother and child are tracked through a Maternal Health Card, which carries a 16-digit unique identification number made up of codes for the state, district, block, health centre and pregnant person and/or child, plus a serial number. At its inception, the purpose of the Mother and Child Tracking System was to achieve health benefits for pregnant women. However, the system has since expanded to facilitate the delivery of cash assistance to expectant mothers, and is in the process of being integrated with Aadhaar, the Indian ID system. This example illustrates the potential for mission creep in data-intensive initiatives.

More overtly ill-intentioned systems have also emerged across the globe: Poland, for example, announced in 2021 that it would set up a registry to monitor instances of miscarriage.

As with users of period-tracking apps, beneficiaries of RMNCH services are vulnerable to corporate exploitation of their personal data. RMNCH digital services also collect vast amounts of sensitive health data and track patients’ locations. In the current context of the criminalisation of abortion, processing such large amounts of personal data raises concerns that it may be misused for surveillance purposes and shared without the individual’s consent.

Furthermore, these digital health initiatives operate within a complex ecosystem of public and private actors with competing interests. As with period-tracking apps, this raises concerns about the intentions of private-sector corporations handling sensitive health data when their main purpose is to make a profit.

Where does all of this leave users?

The primary responsibility for mitigating the risk of disclosures of personal information should lie with the companies designing the apps and/or products which collect health data, as they should strive to protect the privacy of their users.

Failing that, there are a number of measures individuals can take to protect their privacy when accessing reproductive healthcare, including abortion care, online.

Deleting apps

Advice has been circulating on social media urging individuals to delete their period-tracking apps immediately. Of course, anyone who feels unsafe using period-tracking apps following the overturning of Roe v Wade should, at the very least, take steps to limit their engagement with these apps. However, deleting the app and erasing data are two different things. Deleting the app means that it will stop collecting further data from the user. This only applies from the point of deletion onwards, and does nothing about data that may have been entered into the app before it was deleted.

In many circumstances, users may need to engage directly with the company to access their data, amend it or delete it.

For example, Clue explicitly states that, to obtain complete deletion, users need to send the company an email. Similarly, Flo asks users to email it directly in order to exercise their privacy rights, including the right to erasure.

Conclusion

With the current news putting period-tracking apps in the spotlight, we are seeing companies and app developers push incognito and anonymous modes. However, this is not enough. A privacy-by-design approach means not treating privacy as an afterthought and adapting the product as much as possible to ensure that:

- It collects only the data that it strictly needs;

- There are safeguards to prevent that data being passed on to third parties, whether deliberately or inadvertently;

- Easily accessible, comprehensive, transparent and understandable privacy policies are made available to users.

In the current context, implementing privacy-by-design approaches will not only protect the privacy of users but can also provide a competitive advantage. We are seeing a growing group of consumers who are increasingly concerned about their privacy and feel the need to protect themselves in a world where reproductive healthcare will be subject to increasing surveillance and restrictions.