Creating content in the gig economy: a risky business

The rise of the gig economy, a way of working that relies on short-term contracts and temporary jobs rather than on an employed workforce, has enabled the growth of a number of companies over the last few years. But without the rights that come with full employment, gig economy workers today don't have access to essential protections.

In 2021, PI worked with ADCU and Worker Info Exchange to shed light on the power imbalance between workers and gig economy platforms. We exposed how workers increasingly have to deal with sudden policy changes, biased technologies such as facial recognition that lock them out, and arbitrary suspensions they can hardly challenge. In this second part of our series, we look at a different kind of worker who faces a set of uncannily similar issues: content creators.

Content creators include anyone who produces content for a digital platform and relies on this activity (or aims to make it) as their primary source of income. That includes livestreamers on Twitch and video creators on YouTube and TikTok, but also sex workers on OnlyFans or Pornhub. All of them face the same power imbalance that gig economy workers have been denouncing: they operate on massive platforms that have become inescapable, and they are forced to deal with decisions these platforms make without involving them.

In practice, this means creators having their accounts suspended or banned, leading to an abrupt cut in their revenue; changes to algorithms that massively influence whether their content is promoted or demoted; sudden policy changes; and a lack of transparency about how these algorithms work.

Content creators also face increased security and privacy risks because they need to be constantly online to make a living. These risks can lead to real-life harms, either through targeted attacks on them or through broader attacks leading to leaks, a risk that platforms do not always seem to take seriously.

At PI, we believe gig economy workers and content creators are at the forefront of a growing problem: algorithmic management. Too often dehumanising, and driven by economic gain and rapid technical innovation, algorithmic management relies on the collection and exploitation of data, usually by big companies, to improve work streams. In reality, it exacerbates a number of critical issues, from the fallibility and opacity of the algorithms deployed, to discrimination, to the dehumanisation of work.

To better understand the risks and challenges faced by content creators, we ran a survey to gather feedback from people in this community. Their contributions helped us understand both the general and the specific issues that creators face in their relationship with platforms. They also allowed us to explore the security and privacy risks creators are confronted with and to identify solutions together. This piece details our findings, as well as recommendations for platforms and suggestions for creators.

Security, privacy and relationships with platforms: what we learned

Methodology

This survey was drafted by Privacy International. We consulted organisations working with content creators, seeking their review of the survey and their assistance with dissemination. We identified a number of key individuals and organisations with relevant interests and asked for their guidance. We thank in particular Fairtube, Youtubers Union and United Voice of the World for their assistance and insights.

The survey was designed to gain a better understanding of content creators' challenges and concerns in two main areas: privacy and security on the one hand, and relationships with platforms on the other.

The survey questions were designed to be as neutral as possible and to encourage respondents to challenge PI's assumptions. In both areas, the survey suggested possible solutions in order to assess how much support they would have among the affected communities.

Dissemination was done on PI's and supporters' social media accounts as well as through our newsletter.

The survey was launched in May 2022 at this address. At the date of publication, 71 people had completed the survey. Of these, 31 reported making all or most of their income through content creation; we refer to them as professionals in this piece. The survey remained open until September 2023 so that we could monitor further responses.

Security/privacy

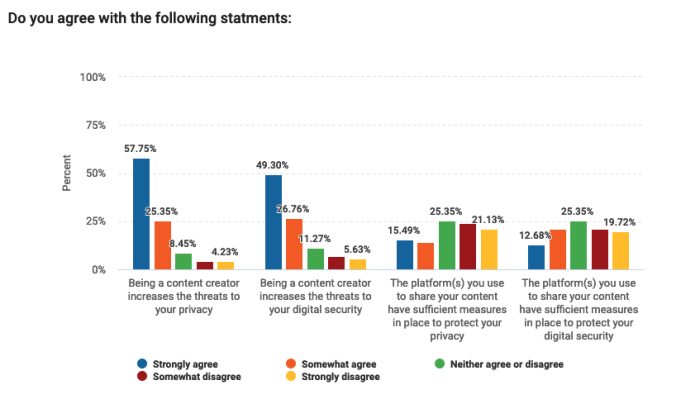

More often than not, content creators need to expose parts of their identity by putting their face, voice or body on public or semi-public display. Consequently, they are more exposed to the privacy and security risks that people face online. In our survey, more than 4 out of 5 content creators agreed that this activity increases the threats to their privacy, and more than 3 out of 4 agreed that it increases the threats to their digital security. The latter figure rises to more than 4 out of 5 among professionals. This suggests a correlation between public visibility and perceived security risks.

Yet content creators do not feel that platforms protect them sufficiently. Fewer than 30% of respondents agreed that platforms have sufficient measures in place to protect their digital security and privacy.

This lack of confidence in the platforms' ability to safeguard their privacy and security is understandable. Platforms have failed to maintain good standards on numerous occasions. Twitch, for example, reportedly suffered a massive data breach in October 2021 that exposed its streamers' revenues. OnlyFans content was reportedly leaked in February 2020, suddenly making public terabytes of data meant to be accessible only through subscription. YouTube, Instagram and TikTok have also had alleged data breaches exposing logins and other personal data. The responses from content creators may reflect the consequences of these failings.

Most content creators (70%) have taken steps to improve their digital security and privacy as part of their activities. This rises to 80% among professionals. When asked what kind of measures they had taken, the top five actions (with the number of respondents citing each) were:

- Use of two-factor authentication (2FA): 23

- Use of separate email addresses: 22

- Use of a VPN: 19

- Fake name/identity/alias for their professional account: 7

- Removal of metadata/EXIF data from photos/videos: 7

PI used these results to create a series of short videos with privacy and security tips. You can find [those videos here](link to videos), as well as videos from content creators explaining why they took these steps. While these suggestions are only indicative and might not always work, they should be useful to anyone interested in improving their digital hygiene, whether they are a content creator or not.

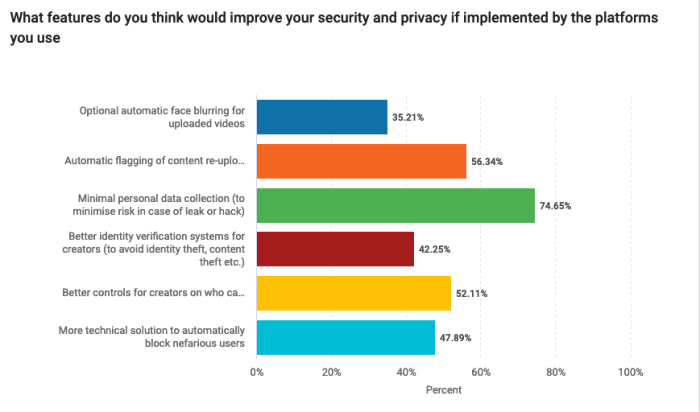

The last question in this section looked at potential solutions that platforms could implement to strengthen the privacy and security of their content creators. While most of the suggestions put forward by PI received support from more than 50% of respondents, one was particularly popular: 3/4 of the content creators surveyed agreed that "minimal personal data collection (to minimise risk in case of leak or hack)" would improve security and privacy. This rises to more than 4/5 among professionals.

Two PI suggestions received less support: better identity verification systems, and optional automatic face blurring for uploaded videos. The latter was mostly aimed at sex workers, but it barely received more support from this sub-group, with only 42% of them agreeing.

Relationship with Platforms

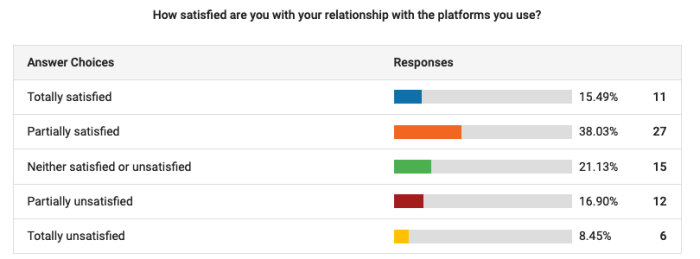

In contrast to the consistent and strong responses on security and privacy, satisfaction with platforms varies a lot more. This can partially be explained by the diversity of the platforms on which respondents are active: respondents added 16 platforms to the 19 listed in the survey.

While about half of the respondents are mostly happy with their relationship with platforms (slightly fewer among professionals), at least a quarter were at least somewhat dissatisfied. Among professionals, dissatisfaction rises to over a third of respondents. This may suggest that the more time a content creator invests in a platform and the more tightly connected they are to it, the more likely they are to be dissatisfied or to voice their issues.

This is potentially due to the stakes being higher for people who make a substantial share of their income through these platforms. A decision to suspend their account or demote their content will have a more dramatic impact on their lives than on the lives of people who rely less on the platform to make a living.

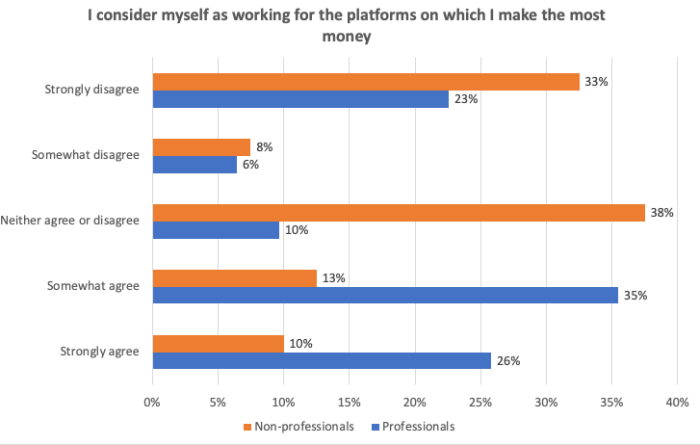

When asked whether they considered themselves to be working for the platform, more than 60% of professional content creators at least somewhat agreed, while 29% at least somewhat disagreed. Understandably, this sentiment is not shared by people who don't make most of their income through platforms, most of whom disagreed with the statement.

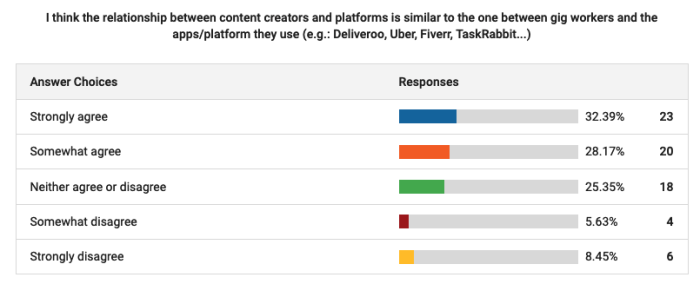

60% of respondents agreed that the relationship between content creators and platforms is similar to the one between gig workers and the apps/platforms they use (e.g. Deliveroo, Uber, Fiverr, TaskRabbit). Only 8.45% strongly disagreed. This feeling is stronger among professionals, more than 70% of whom agreed, while 13% strongly disagreed.

The split on whether creators see themselves as working for a platform could partially be explained by the large number of platforms that exist for adult content creators. While platforms like OnlyFans are dominant, many other platforms specialise in specific types of content, allowing sex workers to share their content on multiple platforms without feeling too affiliated with any one of them. This is not the case for livestreamers (such as gamers on Twitch), for whom competition is almost nonexistent. These content creators are therefore likely to depend on a single platform, increasing the chance that they consider themselves as working for it.

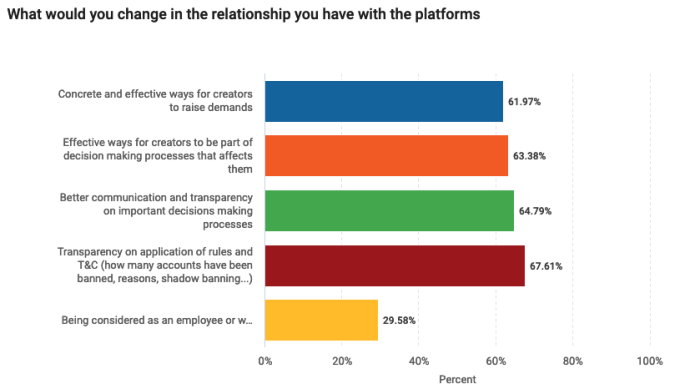

The content creators surveyed felt quite positively about PI's suggestions to improve their relationships with platforms. In particular, more than 2/3 of respondents agreed that they would like:

- More transparency around the application of rules and Terms & Conditions (how many accounts have been banned, the reasons, shadow banning...);

- Effective ways for creators to be part of decision-making processes that affect them;

- Better communication and transparency on important decision-making processes.

Only 1/3 of respondents indicated that they would like to be considered employees or workers of the platforms (i.e. to have access to insurance, sick leave, a minimum monthly salary under certain conditions, etc.). This seems to support the idea that content creators appreciate and value the freedom they have in their relationship with platforms.

Key takeaways

A few interesting findings emerged from this work.

1. Security and privacy matter for content creators, and platforms must take them more seriously

Content creators strongly agree that using platforms for their work puts their privacy and security at risk. While this may seem counter-intuitive for people who reveal themselves online, the responses show that some of their concerns relate to their other online activities and to their real lives. Fundamentally, it is not so much the fact that they create content that generates risk, but rather their interactions with platforms and how these leak into their personal lives.

For example, 1/3 of the respondents said they were using a VPN. This tool offers limited protection: it will not conceal the name or email address a content creator uses, nor prevent people from recognising their face if they have seen it in a video. It nevertheless helps by hiding their IP address. This makes it harder for the platforms or services a content creator uses to link their content creator account with their other online activities, including those tied to their real identity.

This can be particularly useful on social media where adult content creators might choose to maintain separate personal and professional accounts, and want to avoid 'data contamination' (such as the professional account being recommended to family members or friends).

2. The more money content creators make on a platform, the more likely they are to question their relationship and show concerns

Content creators who depend on a platform for a substantial share of their income have more at stake in the decisions that platform makes.

Additionally, platforms tend to curate their relationships with top earners but do not necessarily pay attention to smaller, yet significant, channels. For example, it appears that to earn an average US income on Twitch, a streamer needs to be in the top 0.015% of all streamers. As a result, the community appears to be very much aware that their status depends on their success, and that a drop in audience will automatically lead to reduced interest from the platform.

However, it is in all content creators' interest to improve conditions with the platforms if they aspire to make more money on them. This was reflected in the support that PI's suggestions received, in particular transparency around the application of rules and around decision-making processes.

3. Content creators don't necessarily consider themselves as working for the platforms but tend to consider their relationships with platforms similar to those of gig economy workers

The comparison between content creators and gig economy workers was the assumption we most wanted to test. While the results are limited by the relatively small number of responses, the content creators surveyed appear to agree with this framing.

This framing allows us to view the relationship in a different light. First, it reinforces the idea that there is a huge power imbalance between these new types of workers and the platforms they work for, ranging from the opacity of decision-making processes to the devastating consequences that a change in an algorithm or a policy can have on them. Second, it enables us to think more broadly about these new forms of work and about the importance of challenging algorithmic management and automated decision making in contexts where people's livelihoods are on the line.

It's easy to think that algorithmic management and automated decision-making processes (such as work allocation or content recommendation) only affect a very specific kind of worker, but the trend towards datafying work is not limited to those sectors. PI's work on remote workplace surveillance and Office 365 exposed how productivity is increasingly defined through data that is automatically collected and processed to produce metrics used for decision making.

What platforms must do

Whether they like it or not, platforms now play a role nearly equivalent to that of an employer for some people. A policy change they make or a tweak to their algorithm can have an impact on people's real lives. Being an online content creator is an increasingly attractive activity among younger people. In the US and the UK, a survey of 3,000 children found that being a YouTube star was a more sought-after profession than being an astronaut. If such careers continue to be pursued, platforms have a responsibility to take the privacy and security of content creators seriously. They must also rethink their decision-making processes, communication and payment practices towards what essentially constitutes their workforce.

Based on the results of the survey and on PI's Data Exploitation principles, we encourage platforms to:

Regarding Security and Privacy:

- Adhere to data minimisation principles and limit their collection and processing of personal data

- Offer adequate tools to content creators to protect their digital and real identities

Regarding algorithmic management and relationships with content creators:

- Increase transparency regarding their algorithmic and automated decision-making systems by listing where such systems are used, what key variables they rely on and what logic they deploy

- Involve content creators in decision-making processes that will affect them by notifying them of major upcoming changes to algorithms and offering opportunities for feedback and consultation

- Maintain adequate communication channels with content creators to enable dialogue around important decisions, and offer adequate mechanisms for dispute resolution and for appealing decisions

- Require human review of important decisions before they are taken, in particular decisions regarding account suspension or affecting creators' income