UK MPs Asleep at the Wheel as Facial Recognition Technology Spells The End of Privacy in Public

In June 2023, PI conducted a survey of UK MPs through YouGov, which highlighted their startling lack of knowledge of the use of facial recognition technology (FRT) in their own constituencies, inspiring our new campaign about 'The End of Privacy in Public'.

TAKE ACTION TO STOP THE END OF PRIVACY IN PUBLIC

1. Introduction

The use of facial recognition technology (FRT) by law enforcement and private companies in public spaces throughout the UK is on the rise. In August 2023, the government announced that it is looking to expand its use of FRT, which it considers “an increasingly important capability for law enforcement and the Home Office”. The indiscriminate use of this dystopian biometric technology to identify individuals in public spaces is a form of mass surveillance that threatens human rights and is often carried out without the knowledge or consent of individuals whose sensitive biometric data is being harvested.

Not only does FRT seriously infringe upon the right to privacy, but the use of this highly intrusive technology at protests undermines other fundamental rights such as the right to peaceful assembly and the right to freedom of expression. In the UK, this is taking place within a democratic vacuum without any specific law restricting its use in public spaces.

Outside of the spotlight of public and political scrutiny, the police have been trialling the use of FRT across the UK for several years. More recently, in May 2023, the Metropolitan Police (the Met) was accused of using King Charles' coronation to stage the biggest live facial recognition operation in British history. In July 2023, it was reported that the Minister for Policing, Chris Philp, and senior Home Office officials held a closed-door meeting with Facewatch, a company responsible for providing FRT throughout the private sector, particularly in retail spaces. Minutes of the meeting made the concerning revelation that the government minister appears to have put pressure on the Information Commissioner's Office (ICO), an independent oversight body, by writing to them to ensure they consider the so-called benefits of FRT in fighting retail crime. In late August 2023, the Ministry of Defence and the Home Office called on companies to “help increase the use and effectiveness of facial recognition technologies within UK policing and security” and Chris Philp later acknowledged that all 43 UK territorial police forces were now using retrospective FRT, despite previous police denials to this effect.

Within this context of escalating FRT deployment, PI surveyed UK MPs to ascertain what knowledge legislators have about the increasing use of the surveillance technology and the threats it poses to human rights. We provide an overview of FRT and how it is being used in the UK, the results of PI’s YouGov MP survey, the human rights implications of this technology, and how the UK is lagging behind the rest of the world when it comes to imposing restrictions on its use.

2. What is FRT?

Facial Recognition Technology (FRT) involves the use of cameras (often CCTV cameras) to capture digital images of individuals’ facial features, and the automated processing of these images to identify, authenticate or categorise people. The technology extracts biometric facial data, creates a digital signature of the identified face, stores it and searches records in a database or a watchlist to find a match.
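The matching step described above can be illustrated with a minimal sketch. It assumes that the "digital signature" is a list of numbers and that two signatures are compared using cosine similarity against a fixed threshold; neither detail is specified here, and real systems vary. The names `cosine_similarity` and `search_watchlist`, the threshold of 0.9 and the three-number signatures are all hypothetical and chosen only for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search_watchlist(probe, watchlist, threshold=0.9):
    """Return (name, score) for the closest watchlist entry, or None.

    A match is only reported when the best score reaches the threshold.
    The threshold value is an assumption made for this illustration.
    """
    best_name, best_score = None, -1.0
    for name, template in watchlist.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None


# Hypothetical three-number signatures; real templates are far longer.
watchlist = {
    "person_a": [0.9, 0.1, 0.3],
    "person_b": [0.2, 0.8, 0.5],
}

probe_close = [0.88, 0.12, 0.31]  # very similar to person_a's signature
probe_far = [0.1, 0.2, 0.9]       # not similar enough to either entry

match = search_watchlist(probe_close, watchlist)
no_match = search_watchlist(probe_far, watchlist)
```

In this sketch the threshold decides who is flagged: lowering it produces more alerts, including more mistaken ones, while every face passing the camera is still processed in order to be compared at all.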

FRT can be live or retrospective. Live FRT captures and stores individuals’ images and facial features and matches them in real time. This means that individuals' faces are processed, stored, and scanned against a database to identify someone on the spot, whereas retrospective FRT processes facial images by checking them against a database at a later time.

This highly invasive technology has the potential to pave the road to a dystopian biometric surveillance state, where everyone is identified and tracked everywhere they go, in real time, as they move through public spaces and get on with their everyday lives. PI is deeply concerned by the way FRT is being used as part of the wider expansion of authoritarian practices which are aimed at executing indiscriminate mass surveillance resulting in an onslaught on our fundamental rights and freedoms.

2.a. How has FRT been used by UK police?

Police forces across the UK have been using FRT in public spaces since as far back as 2016. The Metropolitan Police (the Met) have been at the forefront of rolling out FRT across London, including at large-scale events such as Notting Hill Carnival and the King's Coronation, as well as holding live facial recognition testing around the city. The Met, a police force which has been shown to be institutionally racist, misogynist and homophobic, is deploying a technology which has also been reported to discriminate against minorities. Now people of colour and other minorities may face a new threat, as the police equip themselves with this authoritarian technology.

South Wales Police, despite being subject to previous legal action for their use of FRT, have continued to deploy the technology at sporting events, concerts and Pride celebrations. Northamptonshire Police used live facial recognition at the 2023 British Grand Prix over two days, which saw 450,000 people attend.

A recent report shows that the number of retrospective facial searches conducted by UK police has jumped significantly over the past five years. In 2021, 19,827 searches were conducted, rising to 85,158 searches in 2022. The top five police forces using the technology were West Yorkshire Police; Merseyside Police; Police Scotland; British Transport Police and the Met.
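To put the two reported figures in proportion, the short calculation below works out the year-on-year change. It uses only the 2021 and 2022 search counts quoted above; the variable names are our own.

```python
# Retrospective facial searches by UK police, as quoted in the text.
searches_2021 = 19_827
searches_2022 = 85_158

# How many times larger the 2022 figure is than the 2021 figure.
ratio = searches_2022 / searches_2021

# Year-on-year increase expressed as a percentage of the 2021 figure.
percent_increase = (searches_2022 - searches_2021) / searches_2021 * 100

print(f"2022 searches were {ratio:.1f} times the 2021 figure")
print(f"Increase from 2021 to 2022: about {percent_increase:.0f}%")
```

On these figures, the number of searches more than quadrupled in a single year.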

2.b. How has FRT been used by the private sector in the UK?

Many companies in the UK have reportedly been using FRT within their retail outlets. Frasers Group, which owns the retail shops House of Fraser, Sports Direct and Flannels, was reported to be using live FRT in 27 of its stores. As a result of these reports, in April 2023 around 50 MPs and peers wrote to Frasers Group, condemning its use. The letter, co-signed by PI, Big Brother Watch and Liberty, highlights the "invasive and discriminatory" nature of live FRT and states that it has "well-evidenced issues with privacy, inaccuracy, and race and gender discrimination [and] inverts the vital democratic principle of suspicion preceding surveillance and treats everyone who passes the camera like a potential criminal".

The Co-op supermarket chain also reportedly has 35 branches using FRT provided by Facewatch. At the time of the trial, we wrote to Co-op to demand answers on why they were implementing Facewatch's technology. In 2020, Big Brother Watch lodged a complaint against the Southern Co-op chain with the ICO regarding their use of facial recognition. Even a garden centre in South East London has reportedly implemented FRT, and there have been worrying instances of FRT being used in UK schools for administrative purposes such as registration and access to free school meals.

Facewatch brands itself as the UK’s “leading facial recognition retail security company” and as a “cloud-based facial recognition security system [that] has helped leading retail stores… reduce in-store theft, staff violence and abuse” and works internationally, with distributors in Argentina, Brazil and Spain. Demand continues to grow for the kind of technology that Facewatch provides. On top of all this, their technical capabilities are expanding. For example, in 2020 they announced that they were in the process of adapting their technology to identify people wearing facemasks.

3. Our YouGov Survey Results: MPs are asleep at the wheel

PI's June 2023 YouGov survey of MPs regarding the use of FRT in the UK reveals an astonishing knowledge gap about FRT amongst MPs, showing that many are sorely misinformed, or entirely unaware, about how and where FRT is being used and the threats it poses. If our legislators and political representatives themselves do not know how or where this technology is being used, under what legislation and to what effect, then is anybody really at the helm? The survey results point towards the disturbing conclusion that the UK is sleepwalking into the mass use of FRT in public spaces.

Respondents to our survey were made up of a random sample of 114 MPs, representative of parties across the UK party political spectrum and from all the devolved nations.

Finding 1: 70% of MPs do not know if FRT has been used in their constituency

A majority of MPs (70%) responded that they did not know whether or not FRT had been deployed in public spaces in their own constituencies. This is remarkable and highly concerning. It means that many of our elected representatives do not know whether the public spaces in their constituencies are under surveillance by FRT. Nor, therefore, can they understand the impact this has on their constituents’ rights and freedoms.

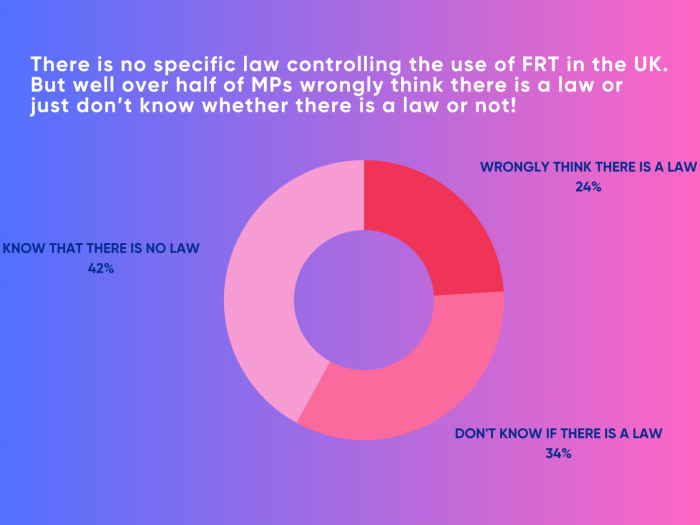

Finding 2: A quarter of MPs incorrectly believe that there is a UK law regulating the use of FRT

There is no specific law in the UK that governs the use of FRT to impose restrictions or safeguards for its use in public spaces. Despite this, the results show that a quarter of MPs incorrectly believe that there is a specific law governing the use of FRT. What’s more, around a third of MPs did not know whether there exists a specific law to govern the use of FRT.

This means that in total, almost 60% of MPs either do not know whether there is such a law or wrongly believe that there is one. This speaks to the fundamental lack of knowledge amongst our elected representatives about FRT. The responses to this survey question demonstrate both complacency and a lack of awareness amongst UK MPs about the absence of legislation explicitly governing the use of this intrusive technology. This is especially concerning, given FRT is already being deployed across the country by the police and private companies, as we discuss in more detail above.

Finding 3: Over a third of MPs know that FRT threatens human rights

The survey results show that just over a third of MPs are aware that FRT threatens our human rights, which shows some appreciation and understanding of the concerns associated with such an intrusive technology. However, worryingly, almost half of the MP respondents incorrectly believe that it does not threaten human rights, whilst around one fifth did not know. This means that in total 65% - or almost two thirds - of MPs either are not aware of, or are simply wrong about, the threat the technology poses to human rights. It seems, then, that most MPs are in the dark about the dangers of FRT when it comes to human rights, despite the fact that the human rights concerns have been widely documented, including by the Information Commissioner’s Office, House of Commons committees, and UK courts.

Finding 4: MPs express concerns over FRT in response to PI’s survey

Although the survey indicated a critical knowledge gap amongst MPs when it comes to the use of FRT in the UK and the dangers that come with it, some MP respondents from a range of political parties did express well-founded concerns about the technology. For example, they stated that "[FRT] has been and will be misused, with marginalised sections of society bearing the brunt", asserting that the unregulated use of FRT presents "a severe risk of stereotyping and profiling" and expressing concerns about the use of FRT for “racial profiling” and “by hostile actors or states”.

These fears echo well-documented harms of the use of FRT that have been recorded in the UK and around the world, as we outline in more detail below.

4. FRT threatens our human rights

MPs’ responses to PI’s YouGov survey are especially concerning given the use of FRT has the potential to insidiously dismantle our human rights and democratic agency. Its use in public spaces invades people's privacy, compounds existing inequalities and amounts to mass surveillance. The way FRT has been deployed so far in an arbitrary or arguably unlawful fashion, which lacks transparency and proper justification, means that it is failing to satisfy both international human rights standards and obligations under human rights, equality and data protection law.

The use of FRT directly interferes with individuals’ rights upheld by the European Convention on Human Rights (ECHR). The UK Human Rights Act (HRA) incorporates these convention rights into domestic British law and Section 6 of the Act imposes a duty on public authorities, such as the police, not to act in a way that is incompatible with those rights.

Article 8 of the convention provides the right to a private and family life and dictates that any interference with the right should be subject to the overarching principles of legality, necessity and proportionality. The use of live facial recognition cannot be said to meet these requirements, considering it is deployed in public spaces without safeguards or restrictions, amounting to mass surveillance. Nor can it be said to be strictly necessary, as other less intrusive means of locating and identifying suspects could be used by the police.

Furthermore, the wide deployment of FRT in public spaces, in particular at protests, infringes upon wider rights such as the right to religion, freedom of association, expression and assembly upheld under Articles 9, 10 and 11 of the convention. The presence of FRT at protests may restrict the ability of individuals to freely express their views.

The incompatibility of live FRT with the right to privacy under Article 8 has already been subject to legal challenge in the UK. In 2020, in the case of Ed Bridges v South Wales Police, the Court of Appeal found that the police’s use of FRT breached privacy rights, data protection laws and equality laws. The case was supported by Liberty and brought by campaigner Ed Bridges, who had his biometric facial data scanned by the FRT on a Cardiff high street in December 2017, and again when he was at a protest in March 2018.

The Court agreed that the interference with Article 8 was not in accordance with the law. The Court also found that the Police had not conducted an appropriate Data Protection Impact Assessment in accordance with the Data Protection Act 2018 and that they did not comply with their Public Sector Equality Duty under the Equality Act 2010 to conduct an equality impact assessment.

Yet, the police continue to wrongly justify using FRT through a patchwork of legislation, relying on their common law policing powers and data protection legislation as sufficient in regulating its use. Recently, the Biometrics and Surveillance Camera Commissioner has critiqued the very limited rules that apply to public space surveillance by the police and noted that oversight and regulation in this area is incomplete, inconsistent and incoherent. In this regard, the Commissioner has called on parliament to define and regulate the use of FRT to ensure its accountable and proportionate deployment in appropriate circumstances.

At the international level, the implications of the use of FRT for the right to privacy guaranteed by Article 17 of the International Covenant on Civil and Political Rights, amongst other international human rights instruments to which the UK is a signatory, continue to be raised.

The UN Special Rapporteur on the right to privacy voiced explicit concerns over the use of FRT in public spaces and the collection of biometric data in a 2021 report on the right to privacy in the digital age. The Rapporteur stated that: "[r]ecording, analysing and retaining facial images of individuals without their consent constitute interference with their right to privacy. By deploying facial recognition technology in public spaces, which requires the collection and processing of facial images of all persons captured on camera, such interference is occurring on a mass and indiscriminate scale".

Furthermore, the United Nations High Commissioner for Human Rights, Michelle Bachelet, publicly echoed calls on states made by a UN Human Rights Council report, to “impose moratoriums on the use of potentially high-risk technology, such as remote real-time facial recognition, until it is ensured that their use cannot violate human rights”, pointing towards people being wrongly “arrested because of flawed facial recognition”. The High Commissioner went on to call for greater transparency and express fears that the long-term storage of data obtained by such technology “could in the future be exploited in as yet unknown ways”.

The use of FRT is also deeply problematic due to biases and inaccuracies of the technology, which has been shown to misidentify people, particularly women, black people and people from other ethnic minorities. The Met’s own statistics show live facial recognition to be over 80% inaccurate and more likely to misidentify people. Indeed, eight trials carried out by the Met Police in London from 2016 to 2018 at the taxpayer’s expense resulted in a 96% rate of “false positives”, with some incidents of deployment recording 100% failure. In one case, a 14-year-old black schoolboy was fingerprinted by the Met Police after he was misidentified by live FRT. Despite these astonishing rates of inaccuracy, the Met continues to deploy FRT. Such discriminatory biases and inaccuracies mean the use of the pernicious technology could have significant consequences for those with protected characteristics under the Equality Act 2010, and risks infringing upon their fundamental human right to non-discrimination as enshrined in both national and international law.
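For readers unfamiliar with how such a rate is calculated, the sketch below shows one common way of doing so. It assumes the “false positive” figure refers to the share of system alerts that pointed at the wrong person, which is our reading rather than something stated above. The function name `false_alert_share` and the counts used are hypothetical; they are chosen only to reproduce a 96% share and are not the Met's data.

```python
def false_alert_share(total_alerts, correct_alerts):
    """Share of system alerts that identified the wrong person.

    Assumes each alert was later checked and classed as correct or
    incorrect. This is one reading of a "false positive" rate.
    """
    if total_alerts <= 0:
        raise ValueError("total_alerts must be positive")
    if not 0 <= correct_alerts <= total_alerts:
        raise ValueError("correct_alerts must be between 0 and total_alerts")
    return (total_alerts - correct_alerts) / total_alerts


# Hypothetical counts for illustration only: 100 alerts, 4 of them correct.
share = false_alert_share(total_alerts=100, correct_alerts=4)
print(f"Share of alerts that were wrong: {share:.0%}")
```

On this reading, the measure describes only the alerts raised. It says nothing about the much larger number of people whose faces were scanned without triggering any alert.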

5. The UK is behind the curve

Whilst national and regional authorities around the world take steps to ban, curtail and limit the use of FRT, the UK appears to be moving in the opposite direction.

In April 2021, the European Commission proposed the first EU regulatory framework for Artificial Intelligence. The Bill proposes that Artificial Intelligence (AI) systems used in different applications should be analysed and classified according to the risk they pose to users. It would place significant limitations on the use of FRT.

The Bill has been scrutinised by the European Parliament and considerably amended by MEPs. MEPs endorsed new transparency and risk-management rules for AI systems, bans on intrusive and discriminatory uses of AI systems, tailor-made regimes for general-purpose AI and foundation models like GPT and the right to make complaints about AI systems.

The ban on intrusive and discriminatory AI covers FRT and includes:

- “Real-time” remote biometric identification systems in publicly accessible spaces;

- “Post” remote biometric identification systems, with the only exception of law enforcement for the prosecution of serious crimes and only after judicial authorization;

- Biometric categorisation systems using sensitive characteristics (e.g. gender, race, ethnicity, citizenship status, religion, political orientation);

- Predictive policing systems (based on profiling, location or past criminal behaviour);

- Emotion recognition systems in law enforcement, border management, workplace, and educational institutions; and

- Indiscriminate scraping of biometric data from social media or CCTV footage to create facial recognition databases (violating human rights and right to privacy).

Furthermore, we have seen outright bans on the use of FRT in public spaces throughout the United States (US). The US city of San Francisco took the lead on banning FRT and was followed by 16 other cities, from Oakland to Boston, which adopted bans between 2019 and 2021. In 2021, Virginia and Vermont banned FRT for law enforcement purposes, whilst the US state of Illinois has banned the use of FRT by private companies.

Given such developments across Europe and the US, the UK is falling behind the curve.

6. Conclusion

The unsettling results of our YouGov survey of MPs show that they are largely unaware and uninformed about the expansion of FRT in the UK. The UK is falling behind international developments and UK MPs are sleepwalking into public mass surveillance when it comes to the propagation of this authoritarian technology, which poses a serious threat to our human rights and society.

To protect people’s rights and prevent abuses, it is vital for the public to have a meaningful say in whether their local police force should be allowed to use such highly intrusive technologies. We believe such highly intrusive technologies should not be used without robust and transparent public consultation and the approval of locally elected representatives.

In the coming months, considering these survey results, PI will be calling on the public to raise their voice and make their concerns heard over the unfettered use of FRT around the UK. We are calling on the public to use their constituent power to call upon their MPs to give this matter the attention it deserves and take action. Watch this space.