The ICO’s announcement about Clearview AI is a lot more than just a £17 million fine

Following PI’s submissions before the UK Information Commissioner’s Office (ICO), as well as other European regulators, the ICO has announced its provisional intent to fine facial recognition company Clearview AI. But this is more than just a regulatory action.

In May 2021, PI, together with three other digital rights organisations, submitted complaints before five European data privacy regulators against Clearview AI, Inc. ("Clearview"), a facial recognition technology company building a gigantic database of more than 10 billion faces. The complaints were filed with the Information Commissioner's Office (ICO) (UK), the Commission Nationale de l'Informatique et des Libertés (CNIL) (France), the Garante per la protezione dei dati personali (Italy), Αρχή Προστασίας Δεδομένων Προσωπικού Χαρακτήρα (Hellenic Data Protection Authority) (Greece) and the Datenschutzbehörde (Austria).

On 29 November 2021, the UK data protection authority (ICO) announced that it had found "alleged serious breaches of the UK's data protection laws", and issued a provisional notice to stop further processing of the personal data of people in the UK and to delete it. It also announced its "provisional intent to impose a potential fine of just over £17 million" on Clearview AI.

The ICO’s provisional findings largely reflect the arguments put forward in the submissions we made before it in May 2021.

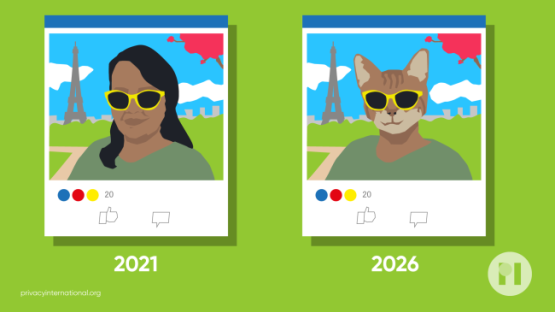

What if we told you that every photo of you, your family, and your friends posted on your social media or even your blog could be copied and saved indefinitely in a database with billions of images of other people, by a company you've never heard of? And what if we told you that this mass surveillance database was pitched to law enforcement and private companies across the world?

This is more or less the business model and aspiration of Clearview AI, a company that only received worldwide attention following a New York Times investigation back in January 2020. The reports that followed about the company's alleged law enforcement and police clients triggered a plethora of investigations by data privacy regulators across the globe, with most of them condemning the company's practices. The UK independent data protection regulator's announcement last week, which comes as a result of a joint investigation with its Australian counterpart, follows suit. The Information Commissioner's Office (ICO) has found that Clearview AI appears to have failed to comply with UK data protection laws in several ways. It has issued a provisional notice to stop further processing of the personal data of people in the UK and to delete it.

But the announcement is more than just the possibility of a harsh fine; it is modern democracies' response to a toxic business model that expects us to give up on every notion of privacy in online public spaces, with potential consequences for our offline lives as well.

Innovations like facial recognition have inspired dozens of start-ups to pitch their AI-based tech to governments across the world, promising big results while deploying untested, and quite often biased, solutions to ill-defined problems. For example, Amazon has made a big deal about its partnerships with police forces to hand out its Ring video doorbells to people in the UK for free. What the COVID-19 pandemic unsurprisingly revealed was a great appetite to advance this corporate surveillance opportunism, which in reality sought to further curtail our freedoms under the cloak of defending them.

All these surveillance fads rely on a faulty, but rather simple, premise: if you are out in public or post something online, you are fair game for them to exploit you and your data for their advantage! This is what Clearview says. In its defence against the series of challenges brought against it, the company has stated that it only collects "publicly available information" and that governments have expressed a "dire need" for its technology. So, does this allow Clearview to 'steal' data that does not belong to it and use it as it sees fit so that it can set up and profit from shady agreements with law enforcement?

Arguments like these have, thankfully, failed to convince courts and regulators. As the Canadian Privacy Commissioner recently put it, "individuals who posted their images online, or whose images were posted by third party(ies), had no reasonable expectations that Clearview would collect, use and disclose their images for identification purposes". This is why the ICO announcement, albeit welcome, does not come as a surprise. It does nothing more than apply existing privacy protections to a growing problem.

If anything, the ICO’s provisional sanction should be viewed as a strong wake-up call. A wake-up call for investors who turn a blind eye to the implications of extremely intrusive technologies, and a wake-up call for governments who outsource the dirty work of surveillance. A wake-up call for policymakers and regulators who argue we need new laws to protect us from malevolent AI, when the existing ones can do the job pretty well if properly enforced by independent regulators that are not afraid to issue meaningful fines.

More importantly, this should be a wake-up call for all of us as citizens and activists. Companies like Clearview AI that lurk in the background and track us online have no place in modern society.