Search

Content type: Examples

As speech recognition and language-processing software continue to improve, the potential exists for digital personal assistants - Apple's Siri, Amazon's Alexa, and Google Assistant - to amass deeper profiles of customers than has ever been possible before. A new level of competition arrived in 2016, when Google launched its Home wireless speaker into a market that already included the Amazon Echo, launched in 2014. It remained unclear how much people would use these assistants and how these…

Content type: Examples

In 2016, Facebook and Google began introducing ways to measure the effectiveness of online ads by linking them to offline sales and in-store visits. Facebook's measurement tools are intended to allow stores to see how many people visit in person after seeing a Facebook campaign, and the company offered real-time updates and ad optimisation. Facebook noted that information will only be collected from people who have turned on location services on their phones. The company also offered an Offline…

Content type: Examples

By 2016, numerous examples had surfaced of bias in facial recognition systems that meant they failed to recognise non-white faces, labelled non-white people as "gorillas", "animals", or "apes" (Google, Flickr), told Asian users their eyes were closed when taking photographs (Nikon), or tracked white faces but couldn't see black ones (HP). The consequences are endemic unfairness and a system that demoralises those who don't fit the "standard". Some possible remedies include ensuring diversity in…

Content type: Examples

In 2015, ABI Research discovered that the power light on the front of Alphabet's Nest Cam was deceptive: even when users had used the associated app to power down the camera and the power light went off, the device continued to monitor its surroundings, noting sound, movement, and other activities. The proof lay in the fact that the device's power drain diminished by an amount consistent with only turning off the LED light. Alphabet explained the reason was that the camera had to be ready to be…

Content type: Examples

In the 2014 report "Networked Employment Discrimination", the Future of Work Project studied data-driven hiring systems, which often rely on data prospective employees have no idea may be used, such as the results of Google searches, and other stray personal data scattered online. In addition, digital recruiting systems that only accept online input exclude those who do not have internet access at home and must rely on libraries and other places with limited access and hours to fill in and…

Content type: Examples

In 2012, London Royal Free, Barnet, and Chase Farm hospitals agreed to provide Google's DeepMind subsidiary with access to an estimated 1.6 million NHS patient records, including full names and medical histories. The company claimed the information, which would remain encrypted so that employees could not identify individual patients, would be used to develop a system for flagging patients at risk of acute kidney injuries, a major reason why people need emergency care. Privacy campaigners…

Content type: Examples

In April 2016, Google's Nest subsidiary announced it would drop support for Revolv, a rival smart home start-up the company bought in 2014. After that, the company said, the thermostats would cease functioning entirely because they relied on connecting to a central server and had no local-only mode. The decision elicited angry online responses from Revolv owners, who criticised the company for arbitrarily turning off devices that they had purchased. The story also raised wider concerns about…

Content type: Impact Case Study

What is the problem

The business models of many companies are based on data exploitation. Big Tech companies such as Google, Amazon, and Facebook; data brokers; online services; apps; and many others collect, use, and share huge amounts of data about us, frequently without our explicit consent or knowledge. Using implicit attributes of low-cost devices, their ‘free’ services or apps, and other sources, they create unmatched tracking and targeting capabilities, which are being used against us.

Why it is…

Content type: News & Analysis

This op-ed originally appeared in the Huffington Post.

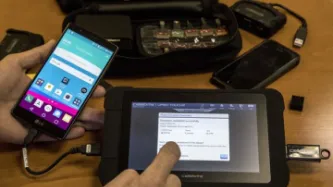

As technologies used by the police race ahead of outdated legislation, we are left vulnerable to the potential misuse and abuse of our data

The vast quantities of data we generate every minute of the day, and the ways that data can be exploited, are challenging democratic and societal norms. The use by UK police forces of technologies that provide access to data on our phones, which document everything we do, everywhere we go, everyone we interact with…

Content type: Long Read

In December 2017, Privacy International published an investigation into the use of data and microtargeting during the 2017 Kenyan elections. Cambridge Analytica was one of the companies that featured as part of our investigation.

Due to the recent reporting on Cambridge Analytica and Facebook, we have seen renewed interest in this issue and our investigation. In March 2018, Channel 4 News featured a report on microtargeting during the 2017 Kenyan Presidential Elections, and the…

Content type: News & Analysis

Written by the Foundation for Media Alternatives

7:01: Naomi wakes up and gets ready for the day.

7:58: Naomi books an Uber ride to Bonifacio Global City (BGC), where she has a meeting. She pays with her credit card. While Naomi is waiting for her Uber, she googles restaurant options for her dinner meeting in Ortigas.

9:00: While her Uber ride is stuck in traffic on EDSA (a limited-access highway circling Manila), Naomi’s phone automatically connects to the free WiFi offered by the…

Content type: Legal Case Files

Investigatory Powers Tribunal

Case No. IPT/13/92/CH

Status: On Appeal

In July 2013, Privacy International filed a complaint before the Investigatory Powers Tribunal (IPT), challenging two aspects of the United Kingdom's surveillance regime revealed by the Snowden disclosures: (1) UK bulk interception of internet traffic transiting undersea fibre optic cables landing in the UK and (2) UK access to the information gathered by the US through its various bulk surveillance programs. Our complaint…

Content type: News & Analysis

Simply put, the National Security Agency is an intelligence agency. Its purpose is to monitor the world's communications, which it traditionally collected by using spy satellites, tapping cables, and placing listening stations around the world.

In 2008, by making changes to U.S. law, the U.S. Congress enabled the NSA to make U.S. industry complicit in its mission. No longer would the NSA have to rely only on international gathering points. It can now go to domestic companies who hold massive…

Content type: News & Analysis

7 October 2013

The following is an English version of an article in the September issue of Cuestión de Derechos, written by Privacy International's Head of International Advocacy, Carly Nyst.

To read the whole article (in Spanish), please go here.

The Chinese government installs software that monitors and censors certain anti-government websites. Journalists and human rights defenders from Bahrain to Morocco have their phones tapped and their emails read by security services. Facebook…

Content type: News & Analysis

This post was written by Chair Emeritus of PI’s Board of Trustees, Anna Fielder.

The UK Data Protection Bill is currently making its way through the genteel debates of the House of Lords. We at Privacy International welcome its stated intent to provide a holistic regime for the protection of personal information and to set the “gold standard on data protection”. To make that promise a reality, one of the commitments in this government’s ‘statement of intent’ was to enhance…